|

Splat-based Gradient-domain Fusion for Seamless View Transition

Dongyoung Choi, Jaemin Cho, Woohyun Kang, Hyunho Ha, James Tompkin, Min H. Kim

Proc. Int. Conf. 3D Vision 2026,

Vancouver, BC, Canada, Mar. 20--23, 2026

|

[PDF][Supp][BibTeX] |

| |

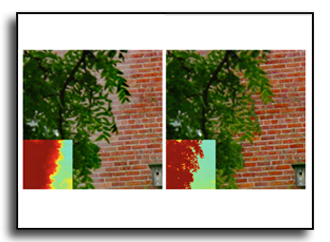

In sparse novel view synthesis with few input views and wide baselines, existing methods often fail due to weak geometric correspondences and view-dependent color inconsistencies. Splatting-based approaches can produce plausible results near training views, but they frequently overfit and struggle to maintain smooth, realistic appearance transitions in novel viewpoints. We introduce a splat-based gradient-domain fusion method that addresses these limitations. Our approach first establishes reliable dense geometry via two-view stereo for stable initialization. We then generate intermediate virtual views by reprojecting input images, which provide reference gradient fields for gradient-domain fusion. By blending these gradients, our method transfers low-frequency, view-dependent colors to the rendered Gaussians, producing seamless appearance transitions across views. Extensive experiments show that our approach consistently outperforms state-of-the-art sparse Gaussian splatting methods, delivering robust and perceptually plausible view synthesis. A comprehensive user study further confirms that our results are perceptually preferred, with significantly smoother and more realistic color transitions than existing methods.

|

|

|

Consistent Multi-Lane Tracking with Temporally Recursive Spline Modeling

Sanghyeon Lee, Donghun Kang, Min H. Kim

Proc. Int. Conf. Computer Vision, Theory and Applications (VISAPP 2026),

Marbella, Spain, Mar. 9 -- 11, 2026

|

[PDF][BibTeX] |

| |

Lane recognition and tracking are essential for autonomous driving, providing precise positioning and navigation data for vehicles. Existing single-image lane detection methods often falter in real-world conditions like poor lighting and occlusions. Video-based approaches, while leveraging sequential frames, typically lack continuity in lane tracking, leading to fragmented lane representations. We introduce a novel approach that addresses these challenges through temporally recursive spline modeling, a robust framework designed to maintain consistent, multi-lane tracking over time. Unlike traditional methods that limit tracking to adjacent lanes, our technique models lane trajectories as temporally recursive splines mapped in world space, capturing smooth lane continuity and enhancing long-term tracking fidelity across complex driving scenes. Our framework incorporates 2D image-based lane detections into a recursive spline model, facilitating accurate, real-time lane trajectory representation across frames. To ensure reliable lane association and continuity, we integrate a Kalman filter and an adaptive Hungarian algorithm, allowing our method to enhance baseline detectors and support consistent multi-lane tracking. Experimental results demonstrate that our temporally recursive spline modeling outperforms conventional approaches in lane detection and tracking metrics, achieving superior continuous lane recognition in challenging driving environments.

|

|

|

Diffusion-Based HDR Reconstruction from Mosaiced Exposure Images

Seeha Lee, Dongyoung Choi, Min H. Kim

Proc. Int. Conf. Computer Vision, Theory and Applications (VISAPP 2026),

Marbella, Spain, Mar. 9 -- 11, 2026

|

[PDF][BibTeX] |

| |

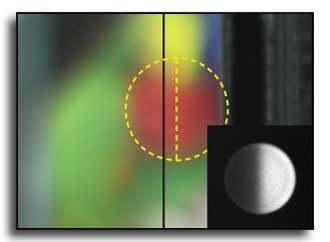

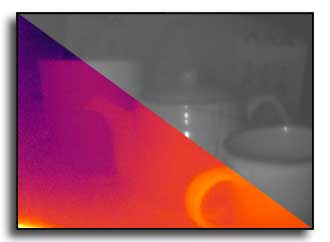

Snapshot-based HDR imaging from Bayer-patterned multi-exposure inputs has gained significant attention with recent advancements in HDR imaging technology. Learning-based approaches have enabled the reconstruction of HDR images from extremely sparse multi-exposure measurements captured on a single Bayer-patterned sensor. However, existing learning-based methods predominantly rely on tone-mapped representations due to the inherent challenges of direct supervision in the HDR radiance domain. This tone-mapping-based approach suffers from critical limitations, including amplified noise and structural distortions in the reconstructed HDR images. The fundamental challenge arises from the high dynamic range of HDR radiance values, which exhibit a sparse and uneven distribution in floating-point space, making gradient-based optimization unstable. To address these issues, we propose a novel diffusion-based HDR reconstruction framework that operates directly in a split HDR radiance domain while preserving the linearity of the original HDR radiance values. By leveraging the generative power of diffusion models, our approach effectively learns the structural and radiometric characteristics of HDR images, leading to superior detail preservation, reduced noise artifacts, and enhanced reconstruction fidelity. Experiments demonstrate that our method outperforms state-of-the-art techniques in both qualitative and quantitative evaluations.

|

|

|

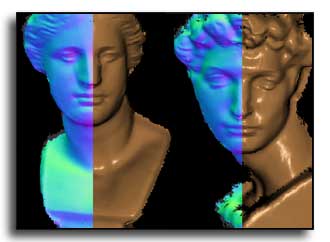

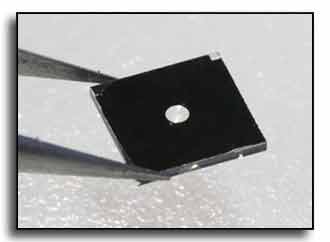

Designing and Fabricating Color BRDFs with Differentiable Wave Optics Designing and Fabricating Color BRDFs with Differentiable Wave Optics

Yixin Zeng, Kiseok Choi, Hadi Amata, Kaizhang Kang, Wolfgang Heidrich, Hongzhi Wu,

Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH Asia 2025,

44(6), Dec. 15 – 18. 2025

|

[PDF][Supple][code]

[BibTeX] |

| |

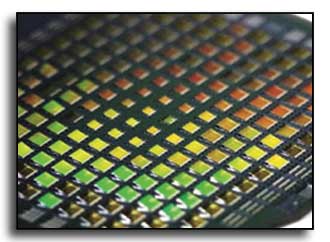

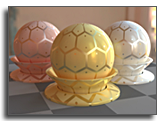

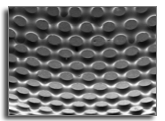

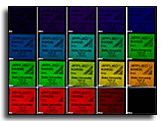

Modeling surface reflectance is central to connecting optical theory with real-world rendering and fabrication. While analytic BRDFs remain standard in rendering, recent advances in geometric and wave optics have expanded the design space for complex reflectance effects. However, existing wave-optics-based methods are limited to controlling reflectance intensity only, lacking the ability to design full-spectrum, color-dependent BRDFs. In this work, we present the first method for designing and fabricating color BRDFs using a fully differentiable wave optics framework. Our differentiable and memory-efficient simulation framework supports end-to-end optimization of microstructured surfaces under scalar diffraction theory, enabling joint control over both angular intensity and spectral color of reflectance. We leverage grayscale lithography with a feature size of 1.5--2.0\,$\mu$m to fabricate 15 BRDFs spanning four representative categories: anti-mirrors, pictorial reflections, structural colors, and iridescences. Compared to prior work, our approach achieves significantly higher fidelity and broader design flexibility, producing physically accurate and visually compelling results. By providing a practical and extensible solution for full-color BRDF design and fabrication, our method opens up new opportunities in structural coloration, product design, security printing, and advanced manufacturing.

|

|

|

Frame-Free Representation of Polarized Light for Resolving Stokes Vector Singularities Frame-Free Representation of Polarized Light for Resolving Stokes Vector Singularities

Shinyoung Yi, Jiwoong Na, Seungmin Hwang, Inseung Hwang, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH Asia 2025,

44(6), Dec. 15 – 18. 2025

|

[PDF][Supple][code]

[BibTeX] |

| |

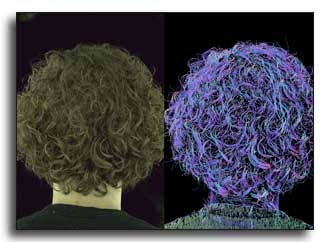

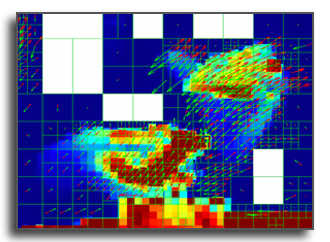

Stokes parameters are the standard representation of polarized light intensity in Mueller calculus and are widely used in polarization-aware computer graphics. However, their reliance on local frames--aligned with ray propagation directions--introduces a fundamental limitation: numerical discontinuities in Stokes vectors despite physically continuous fields of polarized light. This issue originates from the Hairy Ball Theorem, which guarantees unavoidable singularities in any frame-dependent function defined over spherical directional domains. In this paper, we overcome this long-standing challenge by introducing the first frame-free representation of Stokes vectors. Our key idea is to reinterpret a Stokes vector as a Dirac delta function over the directional domain and project it onto spin-2 spherical harmonics, retaining only the lowest-frequency coefficients. This compact representation supports coordinate-invariant interpolation and distance computation between Stokes vectors across varying ray directions--without relying on local frames. We demonstrate the advantages of our approach in two representative applications: spherical resampling of polarized environment maps (e.g., between cube map and equirectangular formats), and view synthesis from polarized radiance fields. In both cases, conventional frame-dependent methods produce singularity artifacts. In contrast, our frame-free representation eliminates these artifacts, improves numerical robustness, and simplifies implementation by decoupling polarization encoding from local frames.

|

|

|

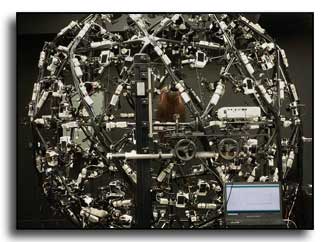

Hyperspectral Polarimetric BRDFs of Real-world Materials Hyperspectral Polarimetric BRDFs of Real-world Materials

Yunseong Moon, Ryota Maeda, Suhyun Shin, Inseung Hwang, Youngchan Kim, Min H. Kim, Seung-Hwan Baek

Proc. ACM SIGGRAPH Asia 2025,

Hong Kong, China, Dec. 15 – 18. 2025

|

[PDF][Data][code]

[BibTeX] |

| |

Acquiring bidirectional reflectance distribution functions (BRDFs) is essential for simulating light transport and analytically modeling material properties. Over the past two decades, numerous intensity-only BRDF datasets in the visible spectrum have been introduced, primarily for RGB image rendering applications. However, in scientific and engineering domains, there remains an unmet need to model light transport with polarization a fundamental wave property of light across hyperspectral bands. To address this gap, we present the first hyperspectral-polarimetric BRDF (hpBRDF) dataset, spanning wavelengths from 414 to 950 nm and densely sampled at 68 spectral bands. This dataset covers both the visible and near-infrared (NIR) spectra, enabling detailed material analysis and light reflection simulations that incorporate polarization at each narrow spectral band. We develop an efficient hpBRDF acquisition system that captures high-dimensional hpBRDFs within a practical acquisition time. Using this system, we analyze the hpBRDFs with respect to their dependencies on wavelength, polarization state, material type, and illumination/viewing geometry. We further perform numerical analyses, including principal component analysis (PCA) and the development of a neural hpBRDF representation for continuous modeling.

|

|

|

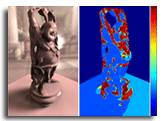

Splat-based 3D Scene Reconstruction with Extreme Motion-blur Splat-based 3D Scene Reconstruction with Extreme Motion-blur

Hyeonjoong Jang, Dongyoung Choi, Donggun Kim, Woohyun Kang, Min H. Kim

Proc. IEEE/CVF International Conference on Computer Vision (ICCV 2025)

Honolulu, HI, United States, Oct. 19 – 23, 2025

|

[PDF][Supple][BibTeX] |

| |

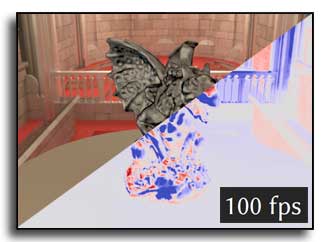

We propose a splat-based 3D scene reconstruction method from RGB-D input that effectively handles extreme motion blur, a frequent challenge in low-light environments. Under dim illumination, RGB frames often suffer from severe motion blur due to extended exposure times, causing traditional camera pose estimation methods, such as COLMAP, to fail. This results in inaccurate camera pose and blurry color input, compromising the quality of 3D reconstructions. Although recent 3D reconstruction techniques like Neural Radiance Fields and Gaussian Splatting have demonstrated impressive results, they rely on accurate camera trajectory estimation, which becomes challenging under fast motion or poor lighting conditions. Furthermore, rapid camera movement and the limited field of view of depth sensors reduce point cloud overlap, limiting the effectiveness of pose estimation with the ICP algorithm. To address these issues, we introduce a method that combines camera pose estimation and image deblurring using a Gaussian Splatting framework, leveraging both 3D Gaussian splats and depth inputs for enhanced scene representation. Our method first aligns consecutive RGB-D frames through optical flow and ICP, then refines camera poses and 3D geometry by adjusting Gaussian positions for optimal depth alignment. To handle motion blur, we model camera movement during exposure and deblur images by comparing the input with a series of sharp, rendered frames. Experiments on a new RGB-D dataset with extreme motion blur show that our method outperforms existing approaches, enabling high-quality reconstructions even in challenging conditions. This approach has broad implications for 3D mapping applications in robotics, autonomous navigation, and augmented reality. Both code and dataset will be publicly available.

|

|

|

Benchmarking Burst Super-Resolution for Polarization Images: Noise Dataset and Analysis Benchmarking Burst Super-Resolution for Polarization Images: Noise Dataset and Analysis

Inseung Hwang, Kiseok Choi, Hyunho Ha, Min H. Kim

Proc. IEEE/CVF International Conference on Computer Vision (ICCV 2025)

Honolulu, HI, United States, Oct. 19 – 23, 2025

|

[PDF][Supple][BibTeX] |

| |

Snapshot polarization imaging calculates polarization states from linearly polarized subimages. To achieve this, a polarization camera employs a double Bayer-patterned sensor to capture both color and polarization. It demonstrates low light efficiency and low spatial resolution, resulting in increased noise and compromised polarization measurements. Although burst super-resolution effectively reduces noise and enhances spatial resolution, applying it to polarization imaging poses challenges due to the lack of tailored datasets and reliable ground truth noise statistics. To address these issues, we introduce PolarNS and PolarBurstSR, two innovative datasets developed specifically for polarization imaging. PolarNS provides characterization of polarization noise statistics, facilitating thorough analysis, while PolarBurstSR functions as a benchmark for burst super-resolution in polarization images. These datasets, collected under various real-world conditions, enable comprehensive evaluation. Additionally, we present a model for analyzing polarization noise to quantify noise propagation, tested on a large dataset captured in a darkroom environment. As part of our application, we compare the latest burst super-resolution models, highlighting the advantages of training tailored to polarization compared to RGB-based methods. This work establishes a benchmark for polarization burst super-resolution and offers critical insights into noise propagation, thereby enhancing polarization image reconstruction.

|

|

|

Geometry-guided Online 3D Video Synthesis with Multi-View Temporal Consistency Geometry-guided Online 3D Video Synthesis with Multi-View Temporal Consistency

Hyunho Ha, Lei Xiao, Christian Richardt, Thu Nguyen-Phuoc, Changil Kim, Min H. Kim, Douglas Lanman, Numair Khan

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2025)

Nashville, TN, United States, Jun. 11--15, 2025

|

[PDF][BibTeX] |

| |

We introduce a novel geometry-guided online video view synthesis method with enhanced view and temporal consistency. Traditional approaches achieve high-quality synthesis from dense multi-view camera setups but require significant computational resources. In contrast, selective-input methods reduce this cost but often compromise quality, leading to multi-view and temporal inconsistencies such as flickering artifacts. Our method addresses this challenge to deliver efficient, high-quality novel-view synthesis with view and temporal consistency. The key innovation of our approach lies in using global geometry to guide an image-based rendering pipeline. To accomplish this, we progressively refine depth maps using color difference masks across time. These depth maps are then accumulated through truncated signed distance fields (TSDF) in the synthesized view's image space. This depth representation is view and temporally consistent, and is used to guide a pre-trained blending network that fuses multiple forward-rendered input-view images. Thus, the network is encouraged to output geometrically consistent synthesis results across multiple views and time. Our approach achieves consistent, high-quality video synthesis, while running efficiently in an online manner.

|

|

|

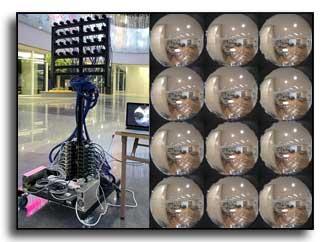

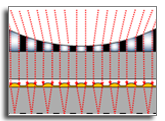

Biologically-inspired Microlens Array Camera for High-speed and High-sensitivity Imaging

Hyun-Kyung Kim, Young-Gil Cha, Jae-Myeong Kwon, Sang-In Bae, Kisoo Kim, Kyung-Won Jang, Yong-Jin Jo, Min H. Kim, Ki-Hun Jeong

AAAS Science Advances,

Jan. 1, 2025

|

[PDF][BibTeX] |

| |

Nocturnal and crepuscular fast-eyed insects often exploit multiple optical channels and temporal summation for fast and low-light imaging. Here we report high-speed and high-sensitive microlens array camera (HS-MAC), inspired by multiple optical channels and temporal summation for insect vision. HS-MAC features crosstalk-free offset microlens arrays on a single rolling shutter CMOS image sensor and performs high-speed and high-sensitivity imaging by employing channel fragmentation, temporal summation, and compressive frame reconstruction. The experimental results demonstrate that HS-MAC accurately measures the speed of a color disk rotating at 1,950 rpm, recording fast sequences at 9,120 fps with low noise equivalent irradiance (0.43 µW/cm²). Besides, HS-MAC visualizes the necking pinch-off of a pool fire flame in dim light conditions below one-thousandth of a lux. The compact high-speed low-light camera can offer a distinct route for high-speed and low-light imaging in mobile, surveillance, and biomedical applications.

|

|

|

Editing Scene Illumination and Material Appearance

of Light-Field Images

Jaemin Cho, Dongyoung Choi, Dahyun Kang, Gun Bang, Min H. Kim

Proc. International Conference on Computer Vision Theory and Applications (VISAPP 2025),

Porto, Portugal, Feb. 26--28, 2025

|

[PDF][BibTeX] |

| |

In this paper, we propose a method for editing the scene appearance of light-field images. Our method enables

users to manipulate the illumination and material properties of scenes captured in light-field format, offering

various control over image appearance, including dynamic relighting and material appearance modification,

which leverages our specially designed inverse rendering framework for light-field images. By effectively

separating light fields into appearance parameters—such as diffuse albedo, normal, specular intensity, and

roughness within a multi-plane image domain, we overcome the traditional challenges of light-field imaging

decomposition. These challenges include handling front-parallel views and a limited image count, which

have previously hindered neural inverse rendering networks when applying them to light-field image data.

Our method also approximates environmental illumination using spherical Gaussians, significantly enhancing

the realism of scene reflectance. Furthermore, by differentiating scene illumination into far-bound and nearbound

light environments, our method enables highly realistic editing of scene appearance and illumination,

especially for local illumination effects. This differentiation allows for efficient, real-time relighting rendering

and integrates seamlessly with existing layered light-field rendering frameworks. Our method demonstrates

rendering capabilities from casually captured light-field images.

|

|

|

Joint Calibration of Cameras and Projectors for Multiview Phase Measuring Profilometry

Hyeongjun Cho, Min H. Kim

Proc. International Conference on Computer Vision Theory and Applications (VISAPP 2025),

Porto, Portugal, Feb. 26--28, 2025

|

[PDF][Supple][BibTeX] |

| |

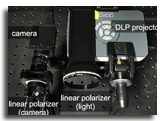

Existing camera-projector calibration for phase-measuring profilometry (PMP) is valid for only a single view.

To extend a single-view PMP to a multiview system, an additional calibration, such as Zhang’s method, is necessary.

In addition to calibrating phase-to-height relationships for each view, calibrating parameters of multiple

cameras, lenses, and projectors by rotating a target is indeed cumbersome and often fails with the local optima

of calibration solutions. In this work, to make multiview PMP calibration more convenient and reliable, we

propose a joint calibration method by combining these two calibration modalities of phase-measuring profilometry

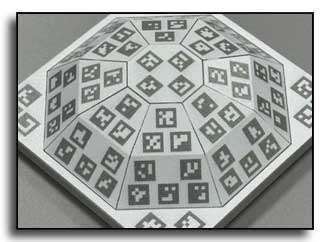

and multiview geometry with high accuracy. To this end, we devise (1) a novel compact, static

calibration target with planar surfaces of different orientations with fiducial markers and (2) a joint multiview

optimization scheme of the projectors and the cameras, handling nonlinear lens distortion. First, we automatically

detect the markers to estimate plane equation parameters of different surface orientations. We then

solve homography matrices of multiple planes through target-aware bundle adjustment. Given unwrapped

phase measurement, we calibrate intrinsic/extrinsic/lens-distortion parameters of every camera and projector

without requiring any manual interaction with the calibration target. Only one static scene is required for

calibration. Results validate that our calibration method enables us to combine multiview PMP measurements

with high accuracy.

|

|

|

Polarimetric BSSRDF Acquisition of Dynamic Faces Polarimetric BSSRDF Acquisition of Dynamic Faces

Hyunho Ha, Inseung Hwang, Nestor Monzon, Jaemin Cho, Donggun Kim, Seung-Hwan Baek, Adolfo Muñoz, Diego Gutierrez, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH Asia 2024,

43(6), Dec. 3 -- 6, 2024

|

[PDF][Supple][code]

[BibTeX] |

| |

Acquisition and modeling of polarized light reflection and scattering help reveal the shape, structure, and physical characteristics of an object, which is increasingly important in computer graphics.

However, current polarimetric acquisition systems are limited to static and opaque objects.

Human faces, on the other hand, present a particularly difficult challenge, given their complex structure and reflectance properties, the strong presence of spatially-varying subsurface scattering, and their dynamic nature.

We present a new polarimetric acquisition method for dynamic human faces, which focuses on capturing spatially varying appearance and precise geometry, across a wide spectrum of skin tones and facial expressions. It includes both single and heterogeneous subsurface scattering, index of refraction, and specular roughness and intensity, among other parameters, while revealing biophysically-based components such as inner- and outer-layer hemoglobin, eumelanin and pheomelanin.

Our method leverages such components' unique multispectral absorption profiles to quantify their concentrations, which in turn inform our model about the complex interactions occurring within the skin layers.

To our knowledge, our work is the first to simultaneously acquire polarimetric and spectral reflectance information alongside biophysically-based skin parameters and geometry of dynamic human faces.

Moreover, our polarimetric skin model integrates seamlessly into various rendering pipelines.

|

|

|

Spin-Weighted Spherical Harmonics for Polarized Light Transport Spin-Weighted Spherical Harmonics for Polarized Light Transport

Shinyoung Yi, Donggun Kim, Jiwoong Na, Xin Tong, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH 2024,

43(4), Jul. 28 - Aug. 1, 2024

|

[PDF][Supple][code]

[BibTeX] |

| |

The objective of polarization rendering is to simulate the interaction of light with materials exhibiting polarization-dependent behavior. However, integrating polarization into rendering is challenging and increases computational costs significantly. The primary difficulty lies in efficiently modeling and computing the complex reflection phenomena associated with polarized light. Specifically, frequency-domain analysis, essential for efficient environment lighting and storage of complex light interactions, is lacking. To efficiently simulate and reproduce polarized light interactions using frequency-domain techniques, we address the challenge of maintaining continuity in polarized light transport represented by Stokes vectors within angular domains. The conventional spherical harmonics method cannot effectively handle continuity and rotation invariance for Stokes vectors. To overcome this, we develop a new method called polarized spherical harmonics (PSH) based on the spin-weighted spherical harmonics theory. Our method provides a rotation-invariant representation of Stokes vector fields. Furthermore, we introduce frequency domain formulations of polarized rendering equations and spherical convolution based on PSH. We first define spherical convolution on Stokes vector fields in the angular domain, and it also provides efficient computation of polarized light transport, nearly on an entry-wise product in the frequency domain. Our frequency domain formulation, including spherical convolution, led to the development of the first real-time polarization rendering technique under polarized environmental illumination, named precomputed polarized radiance transfer, using our polarized spherical harmonics. Results demonstrate that our method can effectively and accurately simulate and reproduce polarized light interactions in complex reflection phenomena, including polarized environmental illumination and soft shadows.

|

|

|

OmniLocalRF: Omnidirectional Local Radiance Fields from Dynamic Videos OmniLocalRF: Omnidirectional Local Radiance Fields from Dynamic Videos

Dongyoung Choi, Hyeonjoong Jang, Min H. Kim

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2024)

Seattle, United States, Jun. 17 – 21, 2024

|

[PDF][Supple][code]

[BibTeX] |

| |

Omnidirectional cameras are extensively used in various applications to provide a wide field of vision. However, they face a challenge in synthesizing novel views due to the inevitable presence of dynamic objects, including the photographer, in their wide field of view. In this paper, we introduce a new approach called Omnidirectional Local Radiance Fields (OmniLocalRF) that can render static-only scene views, removing and inpainting dynamic objects simultaneously. Our approach combines the principles of local radiance fields with the bidirectional optimization of omnidirectional rays. Our input is an omnidirectional video, and we evaluate the mutual observations of the entire angle between the previous and current frames. To reduce ghosting artifacts of dynamic objects and inpaint occlusions, we devise a multi-resolution motion mask prediction module. Unlike existing methods that primarily separate dynamic components through the temporal domain, our method uses multi-resolution neural feature planes for precise segmentation, which is more suitable for long 360{\degree} videos. Our experiments validate that OmniLocalRF outperforms existing methods in both qualitative and quantitative metrics, especially in scenarios with complex real-world scenes. In particular, our approach eliminates the need for manual interaction, such as drawing motion masks by hand and additional pose estimation, making it a highly effective and efficient solution.

|

|

|

OmniSDF: Scene Reconstruction using Omnidirectional Signed Distance Functions and Adaptive Binoctrees OmniSDF: Scene Reconstruction using Omnidirectional Signed Distance Functions and Adaptive Binoctrees

Hakyeong Kim, Andreas Meuleman, Hyeonjoong Jang, James Tompkin, Min H. Kim

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2024)

Seattle, United States, Jun. 17 – 21, 2024

|

[PDF][Supple][code]

[BibTeX] |

| |

We present a method to reconstruct indoor and outdoor static scene geometry and appearance from an omnidirectional video moving in a small circular sweep. This setting is challenging because of the small baseline and large depth ranges. These create large variance in the estimation of ray crossings, and make optimization of the surface geometry challenging. To better constrain the optimization, we estimate the geometry as a signed distance field within a spherical binoctree data structure, and use a complementary efficient tree traversal strategy based on breadth-first search for sampling. Unlike regular grids or trees, the shape of this structure well-matches the input camera setting, creating a better trade-off in the memory-quality-compute space. Further, from an initial dense depth estimate, the binoctree is adaptively subdivided throughout optimization. This is different from previous methods that may use a fixed depth, leaving the scene undersampled. In comparisons with three current methods (one neural optimization and two non-neural), our method shows decreased geometry error on average, especially in a detailed scene, while requiring orders of magnitude fewer cells than naive grids for the same minimum voxel size.

|

|

|

Are Multi-view Edges Incomplete for Depth Estimation? Are Multi-view Edges Incomplete for Depth Estimation?

Numair Khan, Min H. Kim, James Tompkin

International Journal of Computer Vision (IJCV)

Published in 2024

|

[PDF][BibTeX][project] |

| |

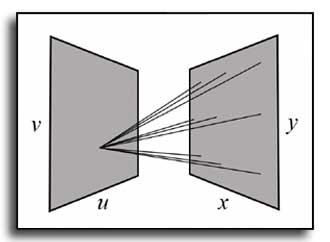

Depth estimation tries to obtain 3D scene geometry from low-dimensional data like 2D images. This is a vital operation in computer vision and any general solution must preserve all depth information of potential relevance to support higher-level tasks. For scenes with well-defined depth, this work shows that multi-view edges can encode all relevant information—that multi-view edges are complete. For this, we follow Elder’s complementary work on the completeness of 2D edges for image reconstruction. We deploy an image-space geometric representation: an encoding of multi-view scene edges as constraints and a diffusion reconstruction method for inverting this code into depth maps. Due to inaccurate constraints, diffusion-based methods have previously underperformed against deep learning methods; however, we will reassess the value of diffusion-based methods and show their competitiveness without requiring training data. To begin, we work with structured light fields and Epipolar Plane Images (EPIs). EPIs present high-gradient edges in the angular domain: with correct processing, EPIs provide depth constraints with accurate occlusion boundaries and view consistency. Then, we present a differentiable representation form that allows the constraints and the diffusion reconstruction to be optimized in an unsupervised way via a multi-view reconstruction loss. This is based around point splatting via radiative transport, and extends to unstructured multi-view images. We evaluate our reconstructions for accuracy, occlusion handling, view consistency, and sparsity to show that they retain the geometric information required for higher-level tasks.

|

|

|

Self-Calibrating, Fully Differentiable NLOS Inverse Rendering Self-Calibrating, Fully Differentiable NLOS Inverse Rendering

Kiseok Choi, Inchul Kim, Dongyoung Choi, Julio Marco, Diego Gutierrez, Min H. Kim

Proc. ACM SIGGRAPH Asia 2023

Sydney, NSW, Australia, December 12 -- 15, 2023

|

[PDF][Supple][Code]

[BibTeX] |

| |

Existing time-resolved non-line-of-sight (NLOS) imaging methods reconstruct hidden scenes by inverting the optical paths of indirect illumination measured at visible relay surfaces. These methods are prone to reconstruction artifacts due to inversion ambiguities and capture noise, which are typically mitigated through the manual selection of filtering functions and parameters. We introduce a fully-differentiable end-to-end NLOS inverse rendering pipeline that self-calibrates the imaging parameters during the reconstruction of hidden scenes, using as input only the measured illumination while working both in the time and frequency domains. Our pipeline extracts a geometric representation of the hidden scene from NLOS volumetric intensities and estimates the time-resolved illumination at the relay wall produced by such geometric information using differentiable transient rendering. We then use gradient descent to optimize imaging parameters by minimizing the error between our simulated time-resolved illumination and the measured illumination. Our end-to-end differentiable pipeline couples diffraction-based volumetric NLOS reconstruction with path-space light transport and a simple ray marching technique to extract detailed, dense sets of surface points and normals of hidden scenes.We demonstrate the robustness of our method to consistently reconstruct geometry and albedo, even under significant noise levels.

|

|

|

Joint Demosaicing and Deghosting of Time-Varying Exposures

for Single-Shot HDR Imaging Joint Demosaicing and Deghosting of Time-Varying Exposures

for Single-Shot HDR Imaging

Jungwoo Kim, Min H. Kim

Proc. IEEE/CVF International Conference on Computer Vision (ICCV 2023)

Paris, France, Oct. 4 -- 6, 2023

|

[PDF][Supple][Code]

[BibTeX] |

| |

The quad-Bayer patterned image sensor has made significant improvements in spatial resolution over recent years due to advancements in image sensor technology. This has enabled single-shot high-dynamic-range (HDR) imaging using spatially varying multiple exposures. Popular methods for multi-exposure array sensors involve varying the gain of each exposure, but this does not effectively change the photoelectronic energy in each exposure. Consequently, HDR images produced using gain-based exposure variation may suffer from noise and details being saturated. To address this problem, we intend to use time-varying exposures in quad-Bayer patterned sensors. This approach allows long-exposure pixels to receive more photon energy than short- or middle-exposure pixels, resulting in higher-quality HDR images. However, time-varying exposures are not ideal for dynamic scenes and require an additional deghosting method. To tackle this issue, we propose a single-shot HDR demosaicing method that takes time-varying multiple exposures as input and jointly solves both the demosaicing and deghosting problems. Our method uses a feature-extraction module to handle mosaiced multiple exposures and a multiscale transformer module to register spatial displacements of multiple exposures and colors. We also created a dataset of quad-Bayer sensor input with time-varying exposures and trained our network using this dataset. Results demonstrate that our method outperforms baseline HDR reconstruction methods with both synthetic and real datasets. With our method, we can achieve high-quality HDR images in challenging lighting conditions.

|

|

|

Microlens array camera with variable apertures for single-shot high dynamic range (HDR) imaging

Young-Gil Cha, Jiwoong Na, Hyun-Kyung Kim, Jae-Myeong Kwon, Seok-Haeng Huh, Seung-Un Jo, Chang-Hwan Kim, Min H. Kim, Ki-Hun Jeong

Optics Express (OE)

Vol. 31, Issue 18, pp. 29589-29595 (2023)

|

[PDF][BibTeX] |

| |

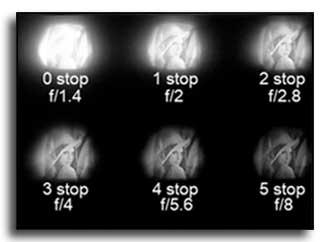

We report a microlens array camera with variable apertures (MACVA) for high dynamic range (HDR) imaging by using microlens arrays with various sizes of apertures. The MACVA comprises variable apertures, microlens arrays, gap spacers, and a CMOS image sensor. The microlenses with variable apertures capture low dynamic range (LDR) images with different f-stops under single-shot exposure. The reconstructed HDR images clearly exhibit expanded dynamic ranges surpassing LDR images as well as high resolution without motion artifacts, comparable to the maximum MTF50 value observed among the LDR images. This compact camera provides, what we believe to be, a new perspective for various machine vision or mobile devices applications.

|

|

Spatio-Focal Bidirectional Disparity Estimation from a Dual-Pixel Image Spatio-Focal Bidirectional Disparity Estimation from a Dual-Pixel Image

Donggun Kim, Hyeonjoong Jang, Inchul Kim, Min H. Kim

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2023)

Vancouver, Canada, Jun. 18 - 22, 2023

|

[PDF][Supple][code]

[BibTeX] |

| |

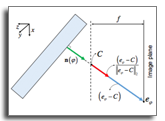

Dual-pixel photography introduces a new era of monoc- ular RGB-D photography with ultra-high resolution, en- abling many applications in computational photography. However, to fully utilize dual-pixel photography, several challenges still remain. Unlike the conventional stereo pair, the dual pixel exhibits a bidirectional disparity that includes both positive and negative values, depending on the focus-plane depth in an image. Furthermore, captur- ing a wide range of dual-pixel disparity requires a shallow depth of field, resulting in a severely blurred image, degrad- ing depth estimation performance. Recently, several data- driven approaches have been proposed to mitigate these two challenges. However, due to the lack of the ground- truth dataset of the bidirectional dual-pixel disparity, ex- isting data-driven methods estimate unidirectional informa- tion only, either inverse depth or blurriness map. In this work, we propose a self-supervised learning method that learns bidirectional disparity from anisotropic blur kernels in dual-pixel photography. Our method does not rely on a training dataset of bidirectional disparity that does not ex- ist yet. Our method can estimate a complete bidirectional disparity map with respect to the focus-plane depth from a dual-pixel image, outperforming the baseline dual-pixel methods.

|

|

|

Polarimetric iToF: Measuring High-Fidelity Depth through Scattering Media Polarimetric iToF: Measuring High-Fidelity Depth through Scattering Media

Daniel S. Jeon, Andreas Meuleman, Seung-Hwan Baek, Min H. Kim

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2023)

selected as CVPR Highlights (10% of the accepted papers)

Vancouver, Canada, Jun. 18 - 22, 2023

|

[PDF][Supple][BibTeX] |

| |

Indirect time-of-flight (iToF) imaging allows us to capture dense depth information at a low cost. However, iToF imag- ing often suffers from multipath interference (MPI) arti- facts in the presence of scattering media, resulting in se- vere depth-accuracy degradation. For instance, iToF cam- eras cannot measure depth accurately through fog because ToF active illumination scatters back to the sensor before reaching the farther target surface. In this work, we pro- pose a polarimetric iToF imaging method that can capture depth information robustly through scattering media. Our observations on the principle of indirect ToF imaging and polarization of light allow us to formulate a novel computa- tional model of scattering-aware polarimetric phase mea- surements that enables us to correct MPI errors. We first devise a scattering-aware polarimetric iToF model that can estimate the phase of unpolarized backscattered light. We then combine the optical filtering of polarization and our computational modeling of unpolarized backscattered light via scattering analysis of phase and amplitude. This allows us to tackle the MPI problem by estimating the scattering energy through the participating media. We validate our method on an experimental setup using a customized off- the-shelf iToF camera. Our method outperforms baseline methods by a significant margin by means of our scattering model and polarimetric phase measurements.

|

|

|

Progressively Optimized Local Radiance Fields for Robust View Synthesis Progressively Optimized Local Radiance Fields for Robust View Synthesis

Andreas Meuleman, Yu-Lun Liu, Chen Gao, Jia-Bin Huang, Changil Kim, Min H. Kim, Johannes Kopf

Proc. IEEE/CVF Computer Vision and Pattern Recognition (CVPR 2023)

Vancouver, Canada, Jun. 18 - 22, 2023

|

[PDF][Supple][Code]

[BibTeX] |

| |

We present an algorithm for reconstructing the radiance field of a large-scale scene from a single casually captured video. The task poses two core challenges. First, most existing radiance field reconstruction approaches rely on accurate pre-estimated camera poses from Structurefrom-Motion algorithms, which frequently fail on in-thewild videos. Second, using a single, global radiance field with finite representational capacity does not scale to longer trajectories in an unbounded scene. For handling unknown poses, we jointly estimate the camera poses with radiance field in a progressive manner. We show that progressive optimization significantly improves the robustness of the re-construction. For handling large unbounded scenes, we dynamically allocate new local radiance fields trained with frames within a temporal window. This further improves robustness (e.g., performs well even under moderate pose drifts) and allows us to scale to large scenes. Our extensive evaluation on the TANKS AND TEMPLES dataset and our collected outdoor dataset, STATIC HIKES, show that our approach compares favorably with the state-of-the-art.

|

|

|

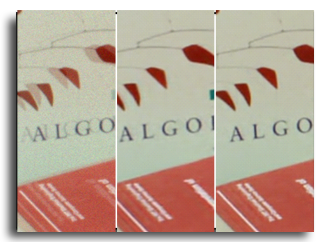

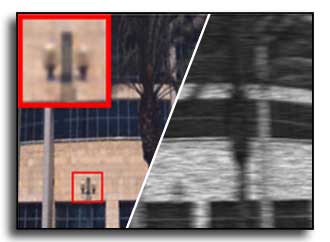

Automated Visual Inspection of Defects in Transparent Display Layers using Light-Field 3D Imaging

Hyeonjoong Jang, Sanghoon Cho, Daniel S. Jeon, Dahyun Kang, Myeongho Song, Changhyun Park, Jaewon Kim, Min H. Kim

IEEE Transactions on Semiconductor Manufacturing (TSM)

Published on May 26, 2023

|

[PDF][BibTeX] |

| |

Since a display panel comprises multiple layered components, defects may occur within different layers through manufacturing processes. Traditional visual inspection systems with a 2D camera cannot identify the occurrence location among layers. Several 3D imaging technologies, such as CT, TSOM, and MRI, suffer from slow performance and a large form factor. In this work, we propose a novel visual inspection method to detect defects on a display panel using light-field 3D imaging. Without powering the target display panel, we first acquire the high-resolution depth information of defects located inside the transparent layers. We then convert the depth information to the object coordinate system to estimate the physical locations of defects. We automatically classify the types of defects and their layer locations along the depth axis in multiple transparent layers of the display panel. Lastly, our experimental results validate that our method can successfully detect and classify various display defects.

|

|

|

Actively Tunable Spectral Filter for Compact Hyperspectral Camera using Angle-Sensitive Plasmonic Structures

Myeong-Su Ahn, Jaehun Jeon, Charles Soon Hong Hwang, Daniel S. Jeon,

Min H. Kim, Ki-Hun Jeong

Advanced Materials Technologies

2201482, published on April 4, 2023

|

[PDF][BibTeX] |

| |

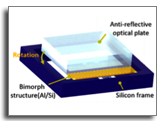

Hyperspectral imaging provides enhanced classification and identification of veiled features for diverse biomedical applications such as label-free cancer detection or non-invasive vascular disease diagnostics. However, hyperspectral cameras still have technical limitations in miniaturization due to the inherently complex and bulky configurations of conventional tunable filters. Herein, a compact hyperspectral camera using an active plasmonic tunable filter (APTF) with electrothermally driven spectral modulation for feature-augmented imaging is reported. APTF consists of angle-sensitive plasmonic structures (APS) over an electrothermal MEMS (Microelectromechanical systems) actuator, fabricated by combining nanoimprint lithography, and MEMS fabrication ona 6-inch wafer. APS have a complementary configuration of Au nanohole and nanodisk arrays supported on asilicon nitride membrane. APTF shows a large angular motion at operational voltages of 5–9 VDC for continuous spectral modulation between 820 and 1000 nm (45 nm/V). The compact hyperspectral camera was fully packaged with a linear polarizer, APTF and amonochromatic camera, exhibiting asize of 16 mm (ϕ) × 9.5 mm (h). Feature-augmented images of subcutaneous vein and a fresh fruit have been successfully demonstrated after the hyperspectral reconstruction and spectral feature extraction. This functional camera provides a new compact platform for point-of-care or in vivo hyperspectral imaging in biomedicalapplications.

|

|

|

Sparse Ellipsometry: Portable Acquisition of Polarimetric SVBRDF and Shape with Unstructured Flash Photography Sparse Ellipsometry: Portable Acquisition of Polarimetric SVBRDF and Shape with Unstructured Flash Photography

Inseung Hwang, Daniel S. Jeon, Adolfo Muñoz, Diego Gutierrez, Xin Tong, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH 2022,

SIGGRAPH Technical Paper Award Honorable Mention

41(4), Aug. 8 - Aug. 11, 2022 |

[PDF][Supple][Code]

[BibTeX] |

| |

Ellipsometry techniques allow to measure polarization information of materials, requiring precise rotations of optical components with different configurations of lights and sensors. This results in cumbersome capture devices, carefully calibrated in lab conditions, and in very long acquisition times, usually in the order of a few days per object. Recent techniques allow to capture polarimetric spatially-varying reflectance information, but limited to a single view, or to cover all view directions, but limited to spherical objects made of a single homogeneous material.We present sparse ellipsometry, a portable polarimetric acquisition method that captures both polarimetric SVBRDF and 3D shape simultaneously. Our handheld device consists of off-the-shelf, fixed optical components. Instead of days, the total acquisition time varies between twenty and thirty minutes per object. We develop a complete polarimetric SVBRDF model that includes diffuse and specular components, as well as single scattering, and devise a novel polarimetric inverse rendering algorithm with data augmentation of specular reflection samples via generative modeling. Our results show a strong agreement with a recent ground-truth dataset of captured polarimetric BRDFs of real-world objects.

|

|

|

Egocentric Scene Reconstruction From an Omnidirectional Video Egocentric Scene Reconstruction From an Omnidirectional Video

Hyeonjoong Jang, Andréas Meuleman, Dahyun Kang, Donggun Kim, Christian Richardt,

Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH 2022

41(4), Aug. 8 - Aug. 11, 2022 |

[PDF][Supple][Code]

[BibTeX] |

| |

Omnidirectional videos capture environmental scenes effectively, but they have rarely been used for geometry reconstruction. In this work, we propose an egocentric 3D reconstruction method that can acquire scene geometry with high accuracy from a short egocentric omnidirectional video. To this end, we first estimate per-frame depth using a spherical disparity network. We then fuse per-frame depth estimates into a novel spherical binoctree data structure that is specifically designed to tolerate spherical depth estimation errors. By subdividing the spherical space into binary tree and octree nodes that represent spherical frustums adaptively, the spherical binoctree effectively enables egocentric surface geometry reconstruction for environmental scenes while simultaneously assigning high-resolution nodes for closely observed surfaces. This allows to reconstruct an entire scene from a short video captured with a small camera trajectory. Experimental results validate the effectiveness and accuracy of our approach for reconstructing the 3D geometry of environmental scenes from short egocentric omnidirectional video inputs. We further demonstrate various applications using a conventional omnidirectional camera, including novel-view synthesis, object insertion, and relighting of scenes using reconstructed 3D models with texture.

|

|

|

FloatingFusion: Depth from ToF and Image-stabilized Stereo Cameras FloatingFusion: Depth from ToF and Image-stabilized Stereo Cameras

Andreas Meuleman, Hakyeong Kim, James Tompkin, Min H. Kim

Proc. European Conference on Computer Vision (ECCV 2022)

Tel Aviv, Oct. 23 – 27, 2022 |

[PDF][Supple][BibTeX] |

| |

High-accuracy per-pixel depth is vital for computational photography, so smartphones now have multimodal camera systems with time-of-flight (ToF) depth sensors and multiple color cameras. However, producing accurate high-resolution depth is still challenging due to the low resolution and limited active illumination power of ToF sensors. Fusing RGB stereo and ToF information is a promising direction to overcome these issues, but a key problem remains: to provide high-quality 2D RGB images, the main color sensor’s lens is optically stabilized, resulting in an unknown pose for the floating lens that breaks the geometric relationships between the multimodal image sensors. Leveraging ToF depth estimates and a wide-angle RGB camera, we design an automatic calibration technique based on dense 2D/3D matching that can estimate camera extrinsic, intrinsic, and distortion parameters of a stabilized main RGB sensor from a single snapshot. This lets us fuse stereo and ToF cues via a correlation volume. For fusion, we apply deep learning via a real-world training dataset with depth supervision estimated by a neural reconstruction method. For evaluation, we acquire a test dataset using a commercial high-power depth camera and show that our approach achieves higher accuracy than existing baselines.

|

|

|

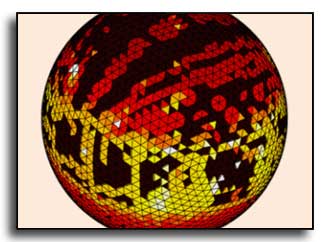

Uniform Subdivision of Omnidirectional Camera Space for Efficient Spherical Stereo Matching Uniform Subdivision of Omnidirectional Camera Space for Efficient Spherical Stereo Matching

Donghun Kang, Hyeonjoong Jang, Jungeon Lee, Chong-Min Kyung, Min H. Kim

Proc. IEEE Computer Vision and Pattern Recognition (CVPR 2022)

New Orleans, USA, Jun. 19 - 24, 2022 |

[PDF][Suppl.][BibTeX] |

| |

Omnidirectional cameras have been used widely to better understand surrounding environments. They are often configured as stereo to estimate depth. However, due to the optics of the fisheye lens, conventional epipolar geometry is inapplicable directly to omnidirectional camera images. Intermediate formats of omnidirectional images, such as equirectangular images, have been used. However, stereo matching performance on these image formats has been lower than the conventional stereo due to severe image distortion near pole regions. In this paper, to address the distortion problem of omnidirectional images, we devise a novel subdivision scheme of a spherical geodesic grid. This enables more isotropic patch sampling of spherical image information in the omnidirectional camera space. By extending the existing equal-arc scheme, our spherical geodesic grid is tessellated with an equal-epiline subdivision scheme, making the cell sizes and in-between distances as uniform as possible, i.e., the arc length of the spherical grid cell’s edges is well regularized. Also, our uniformly tessellated coordinates in a 2D image can be transformed into spherical coordinates via oneto- one mapping, allowing for analytical forward/backward transformation. Our uniform tessellation scheme achieves a higher accuracy of stereo matching than the traditional cylindrical and cubemap-based approaches, reducing the memory footage required for stereo matching by 20%.

|

|

|

Differentiable Appearance Acquisition from a Flash/No-flash RGB-D Pair

Hyun Jin Ku, Hyunho Ha, Joo Ho Lee, Dahyun Kang, James Tompkin, Min H. Kim

Proc. IEEE International Conference on Computational Photography (ICCP 2022)

Caltech, Pasadena, August 1-3, 2022 |

[PDF][Supple][BibTeX] |

| |

Reconstructing 3D objects in natural environments requires solving the ill-posed problem of geometry, spatially-varying material, and lighting estimation. As such, many approaches impractically constrain to a dark environment, use controlled lighting rigs, or use few handheld captures but suffer reduced quality. We develop a method that uses just two smartphone exposures captured in ambient lighting to reconstruct appearance more accurately and practically than baseline methods. Our insight is that we can use a flash/no-flash RGB-D pair to pose an inverse rendering problem using point lighting. This allows efficient differentiable rendering to optimize depth and normals from a good initialization and so also the simultaneous optimization of diffuse environment illumination and SVBRDF material. We find that this reduces diffuse albedo error by 25%, specular error by 46%, and normal error by 30% against singleand paired-image baselines that use learning-based techniques. Given that our approach is practical for everyday solid objects, we enable photorealistic relighting for mobile photography and easier content creation for augmented reality.

|

|

|

High-Accuracy Image Formation Model for Coded Aperture Snapshot Spectral Imaging

Lingfei Song, Lizhi Wang, Min H. Kim, Hua Huang

IEEE Transactions on Computational Imaging (TCI)

published in February, 2022 |

[PDF]

[BibTeX] |

| |

Despite advances in display technology, many existing applications rely on psychophysical datasets of human perception gathered using older, sometimes outdated displays. As a result, there exists the underlying assumption that such measurements can be carried over to the new viewing conditions of more modern technology. We have conducted a series of psychophysical experiments to explore contrast sensitivity using a state-of-the-art HDR display, taking into account not only the spatial frequency and luminance of the stimuli but also their surrounding luminance levels. From our data, we have derived a novel surround-aware contrast sensitivity function (CSF), which predicts human contrast sensitivity more accurately. We additionally provide a practical version that retains the benefits of our full model, while enabling easy backward compatibility and consistently producing good results across many existing applications that make use of CSF models. We show examples of effective HDR video compression using a transfer function derived from our CSF, tone-mapping, and improved accuracy in visual difference prediction.

|

|

|

Modelling Surround-aware Contrast Sensitivity for HDR Displays

Shinyoung Yi, Daniel S. Jeon, Ana Serrano, Se-Yoon Jeong, Hui-Yong Kim,

Diego Gutierrez, Min H. Kim

Computer Graphics Forum (CGF)

published in January, 2022 |

[PDF][Suppl.][Dataset]

[BibTeX] |

| |

Despite advances in display technology, many existing applications rely on psychophysical datasets of human perception gathered using older, sometimes outdated displays. As a result, there exists the underlying assumption that such measurements can be carried over to the new viewing conditions of more modern technology. We have conducted a series of psychophysical experiments to explore contrast sensitivity using a state-of-the-art HDR display, taking into account not only the spatial frequency and luminance of the stimuli but also their surrounding luminance levels. From our data, we have derived a novel surround-aware contrast sensitivity function (CSF), which predicts human contrast sensitivity more accurately. We additionally provide a practical version that retains the benefits of our full model, while enabling easy backward compatibility and consistently producing good results across many existing applications that make use of CSF models. We show examples of effective HDR video compression using a transfer function derived from our CSF, tone-mapping, and improved accuracy in visual difference prediction.

|

|

|

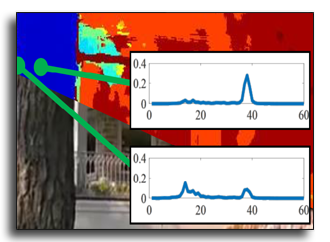

Edge-aware Bi-directional Diffusion for Dense Depth Estimation from Light Fields

Numair Khan, Min H. Kim, James Tompkin

Proc. British Machine Vision Conference (BMVC) 2021

Virtual, November 22nd - 25th, 2021

|

[PDF][Code][BibTeX] |

| |

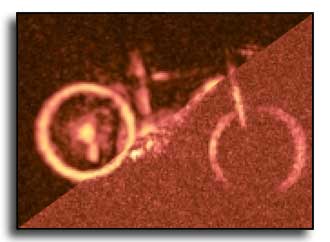

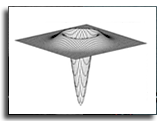

We present an algorithm to estimate fast and accurate depth maps from light fields via a sparse set of depth edges and gradients. Our proposed approach is based around the idea that true depth edges are more sensitive than texture edges to local constraints, and so they can be reliably disambiguated through a bidirectional diffusion process. First, we use epipolar-plane images to estimate sub-pixel disparity at a sparse set of pixels. To find sparse points efficiently, we propose an entropy-based refinement approach to a line estimate from a limited set of oriented filter banks. Next, to estimate the diffusion direction away from sparse points, we optimize constraints at these points via our bidirectional diffusion method. This resolves the ambiguity of which surface the edge belongs to and reliably separates depth from texture edges, allowing us to diffuse the sparse set in a depth-edge and occlusion-aware manner to obtain accurate dense depth maps.

|

|

|

Differentiable Transient Rendering Differentiable Transient Rendering

Shinyoung Yi, Donggun Kim, Kiseok Choi, Adrian Jarabo, Diego Gutierrez, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH Asia 2021

40(6), Dec. 14 - Dec. 17, 2021 |

[PDF][Supple][Code]

[BibTeX] |

| |

Recent differentiable rendering techniques have become key tools to tackle many inverse problems in graphics and vision. Existing models, however, assume steady-state light transport, i.e., infinite speed of light. While this is a safe assumption for many applications, recent advances in ultrafast imaging leverage the wealth of information that can be extracted from the exact time of flight of light. In this context, physically-based transient rendering allows to efficiently simulate and analyze light transport considering that the speed of light is indeed finite. In this paper, we introduce a novel differentiable transient rendering framework, to help bring the potential of differentiable approaches into the transient regime. To differentiate the transient path integral we need to take into account that scattering events at path vertices are no longer independent; instead, tracking the time of flight of light requires treating such scattering events at path vertices jointly as a multidimensional, evolving manifold. We thus turn to the generalized transport theorem, and introduce a novel \textit{correlated importance} term, which links the time-integrated contribution of a path to its light throughput, and allows us to handle discontinuities in the light and sensor functions. Last, we present results in several challenging scenarios where the time of flight of light plays an important role such as optimizing indices of refraction, non-line-of-sight tracking with nonplanar relay walls, and non-line-of-sight tracking around two corners.

|

|

|

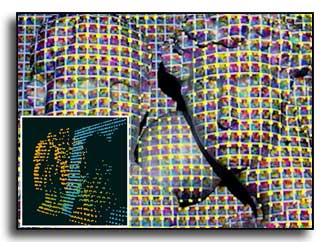

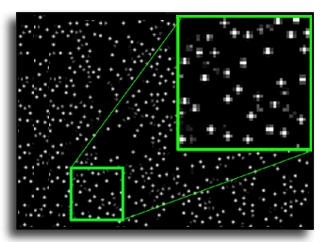

DeepFormableTag: End-to-end Generation and Recognition of Deformable Fiducial Markers DeepFormableTag: End-to-end Generation and Recognition of Deformable Fiducial Markers

Mustafa B. Yaldiz, Andreas Meuleman, Hyeonjoong Jang, Hyunho Ha, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH 2021

40(4), Aug. 9 - Aug. 13, 2021 |

[PDF][Supple][Code]

[BibTeX] |

| |

Fiducial markers have been broadly used to identify objects or embed messages that can be detected by a camera. Primarily, existing detection methods assume that markers are printed on ideally planar surfaces. The size of a message or identification code is limited by the spatial resolution of binary patterns in a marker. Markers often fail to be recognized due to various imaging artifacts of optical/perspective distortion and motion blur. To overcome these limitations, we propose a novel deformable fiducial marker system that consists of three main parts: First, a fiducial marker generator creates a set of free-form color patterns to encode significantly large-scale information in unique visual codes. Second, a differentiable image simulator creates a training dataset of photorealistic scene images with the deformed markers, being rendered during optimization in a differentiable manner. The rendered images include realistic shading with specular reflection, optical distortion, defocus and motion blur, color alteration, imaging noise, and shape deformation of markers. Lastly, a trained marker detector seeks the regions of interest and recognizes multiple marker patterns simultaneously via inverse deformation transformation. The deformable marker creator and detector networks are jointly optimized via the differentiable photorealistic renderer in an end-to-end manner, allowing us to robustly recognize a wide range of deformable markers with high accuracy. Our deformable marker system is capable of decoding 36-bit messages successfully at ~29 fps with severe shape deformation. Results validate that our system significantly outperforms the traditional and data-driven marker methods. Our learning-based marker system opens up new interesting applications of fiducial markers, including cost-effective motion capture of the human body, active 3D scanning using our fiducial markers' array as structured light patterns, and robust augmented reality rendering of virtual objects on dynamic surfaces.

|

|

|

Single-shot Hyperspectral-Depth Imaging with Learned Diffractive Optics Single-shot Hyperspectral-Depth Imaging with Learned Diffractive Optics

Seung-Hwan Baek, Hayato Ikoma, Daniel S. Jeon, Yuqi Li, Wolfgang Heidrich, Gordon Wetzstein, Min H. Kim

Proc. IEEE International Conference on Computer Vision (ICCV) 2021

Montreal, Canada & Virtual, Oct 11, 2021 – Oct 17, 2021 |

[PDF][Supple][BibTeX] |

| |

Imaging depth and spectrum have been extensively studied in isolation from each other for decades. Recently, hyperspectral-depth (HS-D) imaging emerges to capture both information simultaneously by combining two different imaging systems; one for depth, the other for spectrum. While being accurate, this combinational approach induces increased form factor, cost, capture time, and alignment/registration problems. In this work, departing from the combinational principle, we propose a compact single-shot monocular HS-D imaging method. Our method uses a diffractive optical element (DOE), the point spread function of which changes with respect to both depth and spectrum. This enables us to reconstruct spectrum and depth from a single captured image. To this end, we develop a differentiable simulator and a neural-network-based reconstruction that are jointly optimized via automatic differentiation. To facilitate learning the DOE, we present a first HS-D dataset by building a benchtop HS-D imager that acquires high-quality ground truth. We evaluate our method with synthetic and real experiments by building an experimental prototype and achieve state-of-the-art HS-D imaging results.

|

|

|

Modeling Surround-aware Contrast Sensitivity

Shinyoung Yi, Daniel S. Jeon, Ana Serrano, Se-Yoon Jeong, Hui-Yong Kim,

Diego Gutierrez, Min H. Kim

Proc. Eurographics Symposium on Rendering (EGSR) 2021

Saarbrucken, Germany & Virtual, June 29 - July 2, 2021 |

[PDF][Suppl.][Dataset]

[Slides][Code][BibTeX] |

| |

Despite advances in display technology, many existing applications rely on psychophysical datasets of human perception gathered using older, sometimes outdated displays. As a result, there exists the underlying assumption that such measurements can be carried over to the new viewing conditions of more modern technology. We have conducted a series of psychophysical experiments to explore contrast sensitivity using a state-of-the-art HDR display, taking into account not only the spatial frequency and luminance of the stimuli but also their surrounding luminance levels. From our data, we have derived a novel surroundaware contrast sensitivity function (CSF), which predicts human contrast sensitivity more accurately. We additionally provide a practical version that retains the benefits of our full model, while enabling easy backward compatibility and consistently producing good results across many existing applications that make use of CSF models. We show examples of effective HDR video compression using a transfer function derived from our CSF, tone-mapping, and improved accuracy in visual difference prediction.

|

|

|

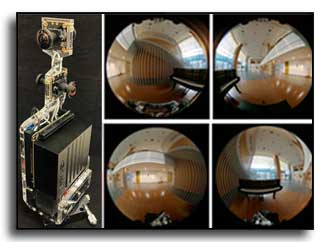

Real-Time Sphere Sweeping Stereo from Multiview Fisheye Images Real-Time Sphere Sweeping Stereo from Multiview Fisheye Images

Andreas Meuleman, Hyeonjoong Jang, Daniel S. Jeon, Min H. Kim

Proc. IEEE Computer Vision and Pattern Recognition (CVPR 2021, Oral)

Nashville, Tennessee, USA, June 19--25, 2021 |

[PDF][video][Supple]

[Code]

[BibTeX] |

| |

A set of cameras with fisheye lenses have been used to capture a wide field of view. The traditional scan-line stereo algorithms based on epipolar geometry are directly inapplicable to this non-pinhole camera setup due to optical characteristics of fisheye lenses; hence, existing complete 360° RGB-D imaging systems have rarely achieved real-time performance yet. In this paper, we introduce an efficient sphere-sweeping stereo that can run directly on multiview fisheye images without requiring additional spherical rectification. Our main contributions are: First, we introduce an adaptive spherical matching method that accounts for each input fisheye camera's resolving power concerning spherical distortion. Second, we propose a fast inter-scale bilateral cost volume filtering method that refines distance in noisy and textureless regions with optimal complexity of O(n). It enables real-time dense distance estimation while preserving edges. Lastly, the fisheye color and distance images are seamlessly combined into a complete 360° RGB-D image via fast inpainting of the dense distance map. We demonstrate an embedded 360° RGB-D imaging prototype composed of a mobile GPU and four fisheye cameras. Our prototype is capable of capturing complete 360° RGB-D videos with a resolution of two megapixels at 29 fps. Results demonstrate that our real-time method outperforms traditional omnidirectional stereo and learning-based omnidirectional stereo in terms of accuracy and performance.

|

|

|

High-Quality Stereo Image Restoration from Double Refraction High-Quality Stereo Image Restoration from Double Refraction

Hakyeong Kim, Andreas Meuleman, Daniel S. Jeon, Min H. Kim

Proc. IEEE Computer Vision and Pattern Recognition (CVPR 2021)

Nashville, Tennessee, USA, June 19--25, 2021 |

[PDF][Video][Code][BibTeX] |

| |

Single-shot monocular birefractive stereo methods have been used for estimating sparse depth from double refraction over edges. They also obtain an ordinary-ray (o-ray) image concurrently or subsequently through additional post-processing of depth densification and deconvolution. However, when an extraordinary-ray (e-ray) image is restored to acquire stereo images, the existing methods suffer from very severe restoration artifacts due to a low signal-to-noise ratio of input e-ray image or depth/deconvolution errors. In this work, we present a novel stereo image restoration network that can restore stereo images directly from a double-refraction image. First, we built a physically faithful birefractive stereo imaging dataset by simulating the double refraction phenomenon with existing RGB-D datasets. Second, we formulated a joint stereo restoration problem that accounts for not only geometric relation between o-/e-ray images but also joint optimization of restoring both stereo images. We trained our model with our birefractive image dataset in an end-to-end manner. Our model restores high-quality stereo images directly from double refraction in real-time, enabling high-quality stereo video using a monocular camera. Our method also allows us to estimate dense depth maps from stereo images using a conventional stereo method. We evaluate the performance of our method experimentally and synthetically with the ground truth. Results validate that our stereo image restoration network outperforms the existing methods with high accuracy. We demonstrate several image-editing applications using our high-quality stereo images and dense depth maps.

|

|

|

NormalFusion: Real-Time Acquisition of Surface Normals for High-Resolution RGB-D Scanning NormalFusion: Real-Time Acquisition of Surface Normals for High-Resolution RGB-D Scanning

Hyunho Ha, Joo Ho Lee, Andreas Meuleman, Min H. Kim

Proc. IEEE Computer Vision and Pattern Recognition (CVPR 2021)

Nashville, Tennessee, USA, June 19--25, 2021 |

[PDF][Supple][Video]

[Code]

[BibTeX] |

| |

Multiview shape-from-shading (SfS) has achieved high-detail geometry, but its computation is expensive for solving a multiview registration and an ill-posed inverse rendering problem. Therefore, it has been mainly used for offline methods. Volumetric fusion enables real-time scanning using a conventional RGB-D camera, but its geometry resolution has been limited by the grid resolution of the volumetric distance field and depth registration errors. In this paper, we propose a real-time scanning method that can acquire high-detail geometry by bridging volumetric fusion and multiview SfS in two steps. First, we propose the first real-time acquisition of photometric normals stored in texture space to achieve high-detail geometry. We also introduce geometry-aware texture mapping, which progressively refines geometric registration between the texture space and the volumetric distance field by means of normal texture, achieving real-time multiview SfS. We demonstrate our scanning of high-detail geometry using an RGB-D camera at ~20 fps. Results verify that the geometry quality of our method is strongly competitive with that of offline multi-view SfS methods.

|

|

|

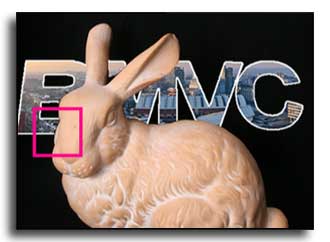

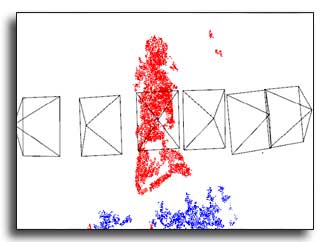

Differentiable Diffusion for Dense Depth Estimation from Multi-view Images Differentiable Diffusion for Dense Depth Estimation from Multi-view Images

Numair Khan, Min H. Kim, James Tompkin

Proc. IEEE Computer Vision and Pattern Recognition (CVPR 2021)

Nashville, Tennessee, USA, June 19--25, 2021 |

[PDF][Video][Code][BibTeX] |

| |

We present a method to estimate dense depth by optimizing a sparse set of points such that their diffusion into a depth map minimizes a multi-view reprojection error from RGB supervision.

We optimize point positions, depths, and weights with respect to the loss by differential splatting that models points as Gaussians with analytic transmittance.

Further, we develop an efficient optimization routine that can simultaneously optimize the 50k+ points required for complex scene reconstruction.

We validate our routine using ground truth data and show high reconstruction quality.

Then, we apply this to light field and wider baseline images via self supervision, and show improvements in both average and outlier error for depth maps diffused from inaccurate sparse points.

Finally, we compare qualitative and quantitative results to image processing and deep learning methods.

|

|

|

View-dependent Scene Appearance Synthesis using Inverse Rendering from Light Fields

Dahyun Kang, Daniel S. Jeon, Hakyeong Kim, Hyeonjoong Jang, Min H. Kim

Proc. IEEE International Conference on Computational Photography (ICCP 2021)

Haifa, Israel, May 23--25, 2021 |

[PDF][Supple][BibTeX] |

| |

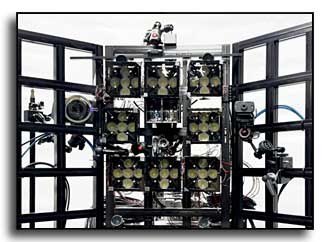

In order to enable view-dependent appearance synthesis from the light fields of a scene, it is critical to evaluate the geometric relationships between light and view over surfaces in the scene with high accuracy. Perfect diffuse reflectance is commonly assumed to estimate geometry from light fields via multiview stereo. However, this diffuse surface assumption is invalid with real-world objects. Geometry estimated from light fields is severely degraded over specular surfaces. Additional scene-scale 3D scanning based on active illumination could provide reliable geometry, but it is sparse and thus still insufficient to calculate view-dependent appearance, such as specular reflection, in geometry-based view synthesis. In this work, we present a practical solution of inverse rendering to enable view-dependent appearance synthesis, particularly of scene scale. We enhance the scene geometry by eliminating the specular component, thus enforcing photometric consistency. We then estimate spatially-varying parameters of diffuse, specular, and normal components from wide-baseline light fields. To validate our method, we built a wide-baseline light field imaging prototype that consists of 32 machine vision cameras with fisheye lenses of 185 degrees that cover the forward hemispherical appearance of scenes. We captured various indoor scenes, and results validate that our method can estimate scene geometry and reflectance parameters with high accuracy, enabling view-dependent appearance synthesis at scene scale with high fidelity, i.e., specular reflection changes according to a virtual viewpoint.

|

|

|

View-consistent 4D Light Field Depth Estimation

Numair Khan, Min H. Kim, James Tompkin

Proc. British Machine Vision Conference (BMVC) 2020

Virtual, September 7--10, 2020

|

[PDF][Video][Code][BibTeX] |

| |

To estimate appearance parameters, traditional SVBRDF acquisition methods require multiple input images to be captured with

various angles of light and camera, followed by a post-processing step. For this reason, subjects have been limited to static

scenes, or a multiview system is required to capture dynamic objects. In this paper, we propose a simultaneous acquisition

method of SVBRDF and shape allowing us to capture the material appearance of deformable objects in motion using a single

RGBD camera. To do so, we progressively integrate photometric samples of surfaces in motion in a volumetric data structure

with a deformation graph. Then, building upon recent advances of fusion-based methods, we estimate SVBRDF parameters in

motion. We make use of a conventional RGBD camera that consists of the color and infrared cameras with active infrared illumination.

The color camera is used for capturing diffuse properties, and the infrared camera-illumination module is employed

for estimating specular properties by means of active illumination. Our joint optimization yields complete material appearance

parameters. We demonstrate the effectiveness of our method with extensive evaluation on both synthetic and real data that

include various deformable objects of specular and diffuse appearance.

|

|

|

Progressive Acquisition of SVBRDF and Shape in Motion

Hyunho Ha, Seung-Hwan Baek, Giljoo Nam, Min H. Kim

Computer Graphics Forum (CGF), presented at Eurographics 2021

published on Aug. 08, 2020.

|

[PDF][Supple1][BibTeX] |

| |

To estimate appearance parameters, traditional SVBRDF acquisition methods require multiple input images to be captured with

various angles of light and camera, followed by a post-processing step. For this reason, subjects have been limited to static

scenes, or a multiview system is required to capture dynamic objects. In this paper, we propose a simultaneous acquisition

method of SVBRDF and shape allowing us to capture the material appearance of deformable objects in motion using a single

RGBD camera. To do so, we progressively integrate photometric samples of surfaces in motion in a volumetric data structure

with a deformation graph. Then, building upon recent advances of fusion-based methods, we estimate SVBRDF parameters in

motion. We make use of a conventional RGBD camera that consists of the color and infrared cameras with active infrared illumination.

The color camera is used for capturing diffuse properties, and the infrared camera-illumination module is employed

for estimating specular properties by means of active illumination. Our joint optimization yields complete material appearance

parameters. We demonstrate the effectiveness of our method with extensive evaluation on both synthetic and real data that

include various deformable objects of specular and diffuse appearance.

|

|

|

Image-Based Acquisition and Modeling of Polarimetric Reflectance Image-Based Acquisition and Modeling of Polarimetric Reflectance

Seung-Hwan Baek, Tizian Zeltner, Hyun Jin Ku, Inseung Hwang, Xin Tong, Wenzel Jakob, Min H. Kim

ACM Transactions on Graphics (TOG), presented at SIGGRAPH 2020

39(4), Jul. 19 - Jul. 23, 2020

|

[PDF][Supple1][Supple2]

[Supple3][BibTeX] |

| |

Realistic modeling of the bidirectional reflectance distribution function (BRDF) of scene objects is a vital prerequisite for any type of physically based rendering. In the last decades, the availability of databases containing real-world material measurements has fueled considerable innovation in the development of such models. However, previous work in this area was mainly focused on increasing the visual realism of images, and hence ignored the effect of scattering on the polarization state of light, which is normally imperceptible to the human eye. Existing databases thus only capture scattered flux, or they are too directionally sparse (e.g.,~in-plane) to be usable for simulation.

While subtle to human observers, polarization is easily perceived by any optical sensor (e.g., using polarizing filters), providing a wealth of additional information about shape and material properties of the object under observation. Given the increasing application of rendering in the solution of inverse problems via analysis-by-synthesis and differentiation, the ability to realistically model polarized radiative transport is thus highly desirable.