ACM SIGGRAPH Asia 2023


Self-Calibrating, Fully Differentiable NLOS Inverse Rendering


Kiseok Choi, Inchul Kim, Dongyoung Choi, Julio Marco, Diego Gutierrez, Min H. Kim

KAIST, Universidad de Zaragoza - I3A

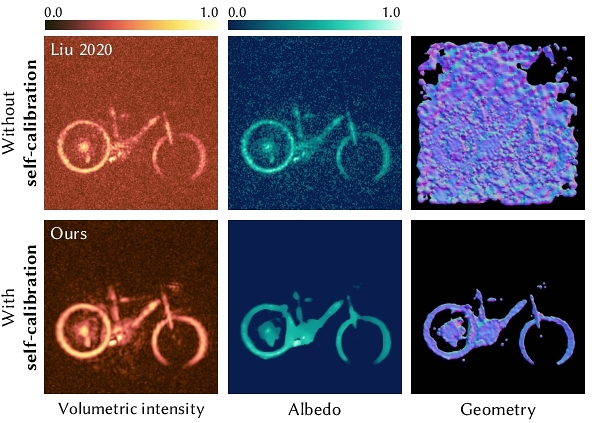

We present a self-calibrating, fully differentiable NLOS inverse rendering pipeline for reconstructing hidden scenes. Our method requires only transient measurements as input. It relies on differentiable rendering and on implicit surface estimation from NLOS volumetric outputs to find the optimal NLOS imaging parameters, which yield accurate reconstructions of the surface points, normals, and albedo of the hidden scene. Top row: the volumetric intensity, albedo, and 3D geometry reconstructed from a real scene [Liu et al. 2020] before optimization; noise interference prevents an accurate geometry estimate. Bottom row: our results after optimizing the imaging parameters.
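The implicit surface estimation mentioned above can be illustrated with a minimal first-hit ray marcher over a volumetric intensity. This is a sketch under our own assumptions, not the authors' implementation: the reconstruction is modeled as a hypothetical callable `volume(p)`, a surface is declared where the intensity first reaches a hypothetical `threshold` along the ray, the crossing is refined by bisection, and the normal is taken as the negative normalized intensity gradient (assuming intensity is higher inside the hidden object).

```python
import math
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical interface: the reconstructed NLOS volumetric intensity queried at a 3D point.
Volume = Callable[[Vec3], float]


def point_on_ray(origin: Vec3, d: Vec3, t: float) -> Vec3:
    """Point at distance t along the ray origin + t * d."""
    return (origin[0] + t * d[0], origin[1] + t * d[1], origin[2] + t * d[2])


def intensity_normal(volume: Volume, p: Vec3, h: float = 1e-4) -> Vec3:
    """Unit normal as the negative, normalized intensity gradient (central differences).
    Points from high toward low intensity, i.e. out of the object under our assumption."""
    grad = []
    for axis in range(3):
        plus = tuple(p[k] + (h if k == axis else 0.0) for k in range(3))
        minus = tuple(p[k] - (h if k == axis else 0.0) for k in range(3))
        grad.append((volume(plus) - volume(minus)) / (2.0 * h))
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0
    return (-grad[0] / norm, -grad[1] / norm, -grad[2] / norm)


def march_surface(volume: Volume, origin: Vec3, direction: Vec3, threshold: float,
                  step: float = 0.01, t_max: float = 2.0,
                  refine_iters: int = 30) -> Optional[Tuple[Vec3, Vec3]]:
    """First-hit ray marching: return (point, normal) where the intensity first
    reaches `threshold` along the ray, or None if it never does within t_max."""
    length = math.sqrt(sum(c * c for c in direction))
    d = (direction[0] / length, direction[1] / length, direction[2] / length)
    t_prev, t = 0.0, step
    while t <= t_max:
        if volume(point_on_ray(origin, d, t)) >= threshold:
            # Bisection between the last sample below and the first sample above threshold.
            lo, hi = t_prev, t
            for _ in range(refine_iters):
                mid = 0.5 * (lo + hi)
                if volume(point_on_ray(origin, d, mid)) >= threshold:
                    hi = mid
                else:
                    lo = mid
            hit = point_on_ray(origin, d, hi)
            return hit, intensity_normal(volume, hit)
        t_prev, t = t, t + step
    return None


if __name__ == "__main__":
    def blob(p: Vec3) -> float:
        # Gaussian blob centred at z = 1; its 0.5 iso-surface is a sphere of radius 0.2 * sqrt(ln 2).
        return math.exp(-(p[0] ** 2 + p[1] ** 2 + (p[2] - 1.0) ** 2) / 0.2 ** 2)

    point, normal = march_surface(blob, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), threshold=0.5)
    print(round(point[2], 4))  # → 0.8335
```

Shooting one such ray per relay-wall or grid pixel would yield a dense set of points and normals; the fixed step plus bisection keeps the sketch simple at the cost of missing features thinner than `step`.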


Abstract


Existing time-resolved non-line-of-sight (NLOS) imaging methods reconstruct hidden scenes by inverting the optical paths of indirect illumination measured at visible relay surfaces. These methods are prone to reconstruction artifacts caused by inversion ambiguities and capture noise, which are typically mitigated by manually selecting filtering functions and parameters. We introduce a fully differentiable, end-to-end NLOS inverse rendering pipeline that self-calibrates the imaging parameters during the reconstruction of hidden scenes, using only the measured illumination as input and working in both the time and frequency domains. Our pipeline extracts a geometric representation of the hidden scene from NLOS volumetric intensities and uses differentiable transient rendering to estimate the time-resolved illumination that this geometry produces at the relay wall. We then optimize the imaging parameters by gradient descent, minimizing the error between our simulated time-resolved illumination and the measured illumination. Our end-to-end differentiable pipeline couples diffraction-based volumetric NLOS reconstruction with path-space light transport and a simple ray marching technique to extract detailed, dense sets of surface points and normals of hidden scenes. We demonstrate that our method consistently and robustly reconstructs geometry and albedo, even under significant noise levels.
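The self-calibration loop described in the abstract (simulate transients from the current estimate, compare them with the measurement, update the parameters by gradient descent) can be illustrated with a deliberately tiny toy. Everything here is an assumption for illustration only: a single hypothetical parameter `sigma` (the width of a Gaussian pulse) stands in for the NLOS imaging parameters, the hypothetical `simulate_transient` stands in for differentiable transient rendering, and central finite differences replace automatic differentiation. It does not reproduce the authors' parameters, renderer, or loss.

```python
import math
import random
from typing import List

# Toy discretization (hypothetical units, not the paper's capture settings).
DT = 0.01      # time-bin width
N_BINS = 200   # number of time bins
T0 = 1.0       # arrival time of the simulated pulse


def simulate_transient(sigma: float) -> List[float]:
    """Toy stand-in for differentiable transient rendering: a unit Gaussian
    pulse at T0 whose temporal width is the parameter being calibrated."""
    return [math.exp(-((i * DT - T0) ** 2) / (2.0 * sigma ** 2)) for i in range(N_BINS)]


def make_measurement(sigma_true: float, noise_std: float = 0.0, seed: int = 0) -> List[float]:
    """Synthetic 'measured' transient with optional additive Gaussian noise."""
    rng = random.Random(seed)
    return [v + rng.gauss(0.0, noise_std) for v in simulate_transient(sigma_true)]


def loss(sigma: float, measured: List[float]) -> float:
    """Squared error between simulated and measured transients, integrated over time."""
    simulated = simulate_transient(sigma)
    return DT * sum((s - m) ** 2 for s, m in zip(simulated, measured))


def calibrate(measured: List[float], sigma0: float = 0.1, lr: float = 0.01,
              iters: int = 200, h: float = 1e-6) -> float:
    """Gradient descent on sigma. The gradient is a central finite difference here;
    a differentiable renderer would supply it through automatic differentiation."""
    sigma = sigma0
    for _ in range(iters):
        grad = (loss(sigma + h, measured) - loss(sigma - h, measured)) / (2.0 * h)
        sigma -= lr * grad
    return sigma


if __name__ == "__main__":
    measured = make_measurement(sigma_true=0.05)
    print(round(calibrate(measured), 4))  # → 0.05
```

The learning rate and starting value are tuned for this one-parameter toy only; a real pipeline would optimize a vector of imaging parameters with an autodiff framework rather than finite differences.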


@InProceedings{Choi:SIGGRAPHAsia:2023,
  author = {Kiseok Choi and Inchul Kim and Dongyoung Choi and Julio Marco
            and Diego Gutierrez and Min H. Kim},
  title = {Self-Calibrating, Fully Differentiable NLOS Inverse Rendering},
  booktitle = {Proceedings of ACM SIGGRAPH Asia 2023},
  month = {December},
  year = {2023},
}


Hosted by Visual Computing Laboratory, School of Computing, KAIST.
