Computer Vision and Pattern Recognition (CVPR 2022)

Uniform Subdivision of Omnidirectional Camera Space for Efficient Spherical Stereo Matching

Donghun Kang, Hyeonjoong Jang, Jungeon Lee, Chong-Min Kyung, Min H. Kim

KAIST

Hyundai Motor Company

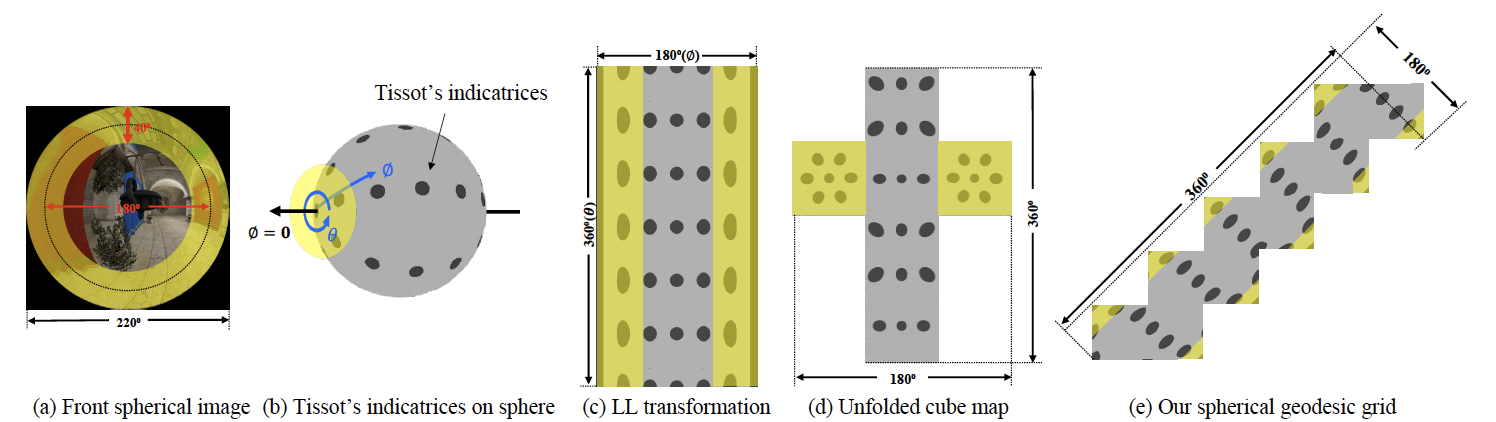

Figure 1. Geometric transformation options for a fisheye image. (a) Fisheye image. The remaining panels show geometric domains overlaid with Tissot’s indicatrices, which mark patches of the same area: (b) the spherical domain in 3D, (c) LL-transformed 2D image (equivalent to the rotated equirectangular image), (d) unfolded cube map, and (e) our proposed spherical geodesic grid. Our geometric transform yields more regular Tissot’s indicatrices. Note that the regions marked in yellow indicate the extreme image distortion near the poles.

Abstract

Omnidirectional cameras have been used widely to better understand surrounding environments. They are often configured as stereo pairs to estimate depth. However, due to the optics of the fisheye lens, conventional epipolar geometry cannot be applied directly to omnidirectional camera images. Intermediate formats of omnidirectional images, such as equirectangular images, have been used instead. However, stereo matching performance on these image formats has been lower than that of conventional stereo due to severe image distortion near the pole regions. In this paper, to address the distortion problem of omnidirectional images, we devise a novel subdivision scheme of a spherical geodesic grid. This enables more isotropic patch sampling of spherical image information in the omnidirectional camera space. By extending the existing equal-arc scheme, our spherical geodesic grid is tessellated with an equal-epiline subdivision scheme, making the cell sizes and in-between distances as uniform as possible, i.e., the arc length of the spherical grid cell’s edges is well regularized. Also, our uniformly tessellated coordinates in a 2D image can be transformed into spherical coordinates via a one-to-one mapping, allowing for analytical forward/backward transformation. Our uniform tessellation scheme achieves higher stereo matching accuracy than the traditional cylindrical and cubemap-based approaches, while reducing the memory footprint required for stereo matching by 20%.
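To make the pole-distortion problem concrete, the following minimal sketch uses the standard equirectangular (latitude-longitude) mapping, not the geodesic grid proposed in the paper. It shows an analytical forward/backward mapping between pixels and spherical coordinates, and computes the solid angle covered by a pixel in each image row. The function names, the pixel-centre convention, and the image size are illustrative assumptions.

```python
import math

def equirect_to_sphere(u, v, width, height):
    """Map the centre of pixel (u, v) to (longitude, latitude) in radians.

    Assumed convention: u grows eastward, v grows downward from the north pole.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return lon, lat

def sphere_to_equirect(lon, lat, width, height):
    """Inverse mapping: (longitude, latitude) back to continuous pixel coordinates."""
    u = (lon + math.pi) / (2.0 * math.pi) * width - 0.5
    v = (math.pi / 2.0 - lat) / math.pi * height - 0.5
    return u, v

def pixel_solid_angle(v, width, height):
    """Solid angle (steradians) of one pixel in row v of an equirectangular image."""
    dlon = 2.0 * math.pi / width
    lat_top = math.pi / 2.0 - v / height * math.pi
    lat_bottom = math.pi / 2.0 - (v + 1) / height * math.pi
    # Area of a latitude-longitude cell on the unit sphere.
    return dlon * (math.sin(lat_top) - math.sin(lat_bottom))

if __name__ == "__main__":
    width, height = 1024, 512  # hypothetical image size
    pole = pixel_solid_angle(0, width, height)
    equator = pixel_solid_angle(height // 2 - 1, width, height)
    print(f"solid angle per pixel: pole row {pole:.3e}, equator row {equator:.3e}")
```

A pixel in the top row covers a far smaller solid angle than a pixel at the equator, so fixed-size matching windows sample the sphere very unevenly. This is the non-uniformity that a more regular tessellation aims to reduce.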

@InProceedings{Kang_2022_CVPR,
  author    = {Donghun Kang and Hyeonjoong Jang and Jungeon Lee and Chong-Min Kyung and Min H. Kim},
  title     = {Uniform Subdivision of Omnidirectional Camera Space for Efficient Spherical Stereo Matching},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2022}
}

Hosted by Visual Computing Laboratory, School of Computing, KAIST.
