GMV: Graphic Modeling and Visualization

- Useful links:


- FAQ:

NB: This is an unofficial FAQ page. In our coursework, the report matters more than the code itself. Please show your own insight into the task through your report. Thank you.

- This is my first time compiling OpenGL code on a machine. What kind of library do I need for our coursework?

You need the GLUT library for our course. You can download its source code from its website (see the list of useful links above). Once you have compiled the library files, you can install them in two different ways.

The first way, for our college machines, is:

1. Compile libraries.

2. Copy the libraries and headers into a "GlutGlui" folder inside the "Visual Studio 2005" folder in your "My Documents" folder.

3. Start Visual Studio 2005.

4. Go to "Tools > Options" menu.

5. Go to "Projects and Solutions > VC++ Directories".

6. Select <Include files> in "Show directories for".

7. Add the include path of the files that you uncompressed, for example, "C:\User\minhkim\My Documents\Visual Studio 2005\GlutGlui\include". Note that you should not include the subdirectory "GL" in the path.

8. Then select <Library files> in "Show directories for".

9. Add the library path of the files that you uncompressed, for example, "C:\User\minhkim\My Documents\Visual Studio 2005\GlutGlui\lib".

10. To deploy your compiled application to another machine, you will probably need to copy glut32.dll into the directory that contains your executable file.

The second way, for your own laptop, is:

1. Compile libraries.

2. Copy "glut32.dll" to "C:\Windows\System32"

3. Copy "include\GL" to "C:\Program Files\Microsoft Visual Studio 8\VC"

4. Copy "glut32.lib" to "C:\Program Files\Microsoft Visual Studio 8\VC\lib"

- Can I compile OpenGL code written for UNIX by using Microsoft Visual Studio?

Technically, yes. Since the release of VS2003, Microsoft has tried to conform to standard C/C++ syntax. Therefore, unless you are using VS6.0 (which is based on Microsoft's own C/C++ dialect), you can compile OpenGL code with Visual Studio, possibly with minor modifications.

- How do I start compiling C++ code in VS2005 when I have C/C++ source code from UNIX?

The easiest way to do it is:

(1) Go to File menu

(2) Select New > Project from existing code

(3) Choose type, Visual C++, then press Next

(4) Type your own location of UNIX code files

(5) Define your own project name, then press Next

(6) Select console application project, then press Next twice.

(7) Press Finish

- I got some compile errors when building the (UNIX) OpenGL code in VS2005. How can I solve them?

I cannot give you a complete solution, but the fixes below work with our college code.

(1) Change #include<iostream.h> to #include<iostream> (the former is the old pre-standard header; the latter is the standard one). Then add using namespace std; below it.

(2) Add #include<stdlib.h> before #include<GL/glut.h> if you get an error regarding the exit function.

- How can I debug the program with our data file?

Define the input argument in the project properties. In the Project menu, select the Properties of your project. Then go to Configuration Properties > Debugging, and enter the Command Arguments as below:

< complexscene.dat

Then press OK. Now when you press <F7> (build) and then <F5> (debug), the program runs with that data file. You can inspect the state of each variable in your procedures, which is a real help for your coding. ;o)

- Can I compile the UNIX code in Cygwin?

Yes, you can. However, you need to change the library arguments passed to the linker. For example (ray tracing),

g++ -c colour.cpp -o colour.o

g++ -c light.cpp -o light.o

g++ -c litscene.cpp -o litscene.o

g++ -c material.cpp -o material.o

g++ -c point.cpp -o point.o

g++ -c polygon.cpp -o polygon.o

g++ -c ray.cpp -o ray.o

g++ -c scene.cpp -o scene.o

g++ -c simplecamera.cpp -o simplecamera.o

g++ -c sphere.cpp -o sphere.o

g++ -c vector.cpp -o vector.o

g++ -c mainray.cpp -o mainray.o

g++ -c main.cpp -o main.o

g++ colour.o material.o point.o ray.o sphere.o polygon.o vector.o scene.o simplecamera.o light.o litscene.o mainray.o -lglut32 -lglu32 -lopengl32 -o ray

NB: You must install the OpenGL package (under Graphics in Cygwin). You must also run the compiled application from the Windows command line, not from the Cygwin command line, because we linked against the Windows libraries in this case.

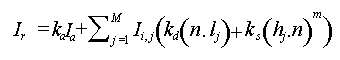

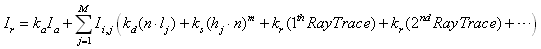

- How can I calculate local illumination?

<Hint> To compute local illumination, we need this equation.

where H = ( E + L ) / | E + L | (E & L are normalized)

The first term is the ambient light reflection; the second term is the sum of the reflections of the local light sources. Inside the second term, the first part is the Lambertian diffuse light (a dot product) and the second part is the specular light from Phong shading. See our lecture notes for more detail. :o)
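As a rough illustration of the description above, here is a minimal single-channel sketch: an ambient term plus, for each light, a Lambertian diffuse part and a specular part built on the halfway vector H. The names Vec3, PointLight, ka, kd, ks and shininess are my own assumptions, not the names in our college code, and the exact equation in the lecture notes may differ in detail.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal vector type (not the Vector class of the course code).
struct Vec3 {
    double x, y, z;
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

inline Vec3 normalize(const Vec3& v) {
    double len = std::sqrt(dot(v, v));
    return Vec3(v.x / len, v.y / len, v.z / len);
}

// One light as seen from the surface point: unit direction L and its intensity.
struct PointLight {
    Vec3 dirToLight;
    double intensity;
    PointLight(const Vec3& d, double i) : dirToLight(d), intensity(i) {}
};

// N and E are unit vectors at the surface point; E points toward the eye.
double localIllumination(const Vec3& N, const Vec3& E,
                         const std::vector<PointLight>& lights,
                         double ka, double ambient,
                         double kd, double ks, double shininess) {
    double result = ka * ambient;  // ambient reflection
    for (std::size_t i = 0; i < lights.size(); ++i) {
        const Vec3& L = lights[i].dirToLight;
        double nDotL = dot(N, L);
        if (nDotL <= 0.0) continue;  // light is behind the surface
        // H = (E + L) / |E + L|
        Vec3 H = normalize(Vec3(E.x + L.x, E.y + L.y, E.z + L.z));
        double diffuse = kd * nDotL;
        double specular = ks * std::pow(std::max(0.0, dot(N, H)), shininess);
        result += lights[i].intensity * (diffuse + specular);
    }
    return result;
}
```

For a colour image you would call this once per colour channel with the matching material coefficients.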

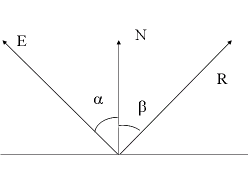

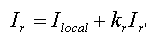

- How can I calculate reflection direction for recursive ray tracing?

<Hint> The direction calculation is:

where R = -E + 2(N.E)N (N & E are normalized)

Now, it can be the new direction of reflected light.

where kr = ks (specular reflectance).

The same situation as in local illumination happens again for the reflected light, iteratively (probably four times in our case). That is the hint for ray tracing. See our lecture notes for more detail. :o)

- There are many scene files. Which scene should I use?

complexscene.dat has two point light sources, which is a more reasonable setup for rendering. We recommend it rather than simplescene.dat.

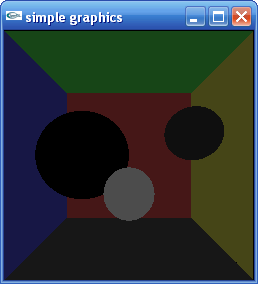

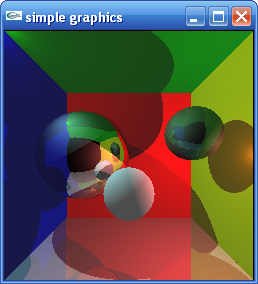

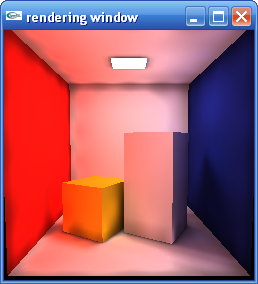

- How does each step of rendering look like?

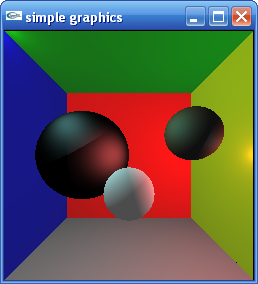

Well if you run your code with complexscene.dat, they might look like below:

|

|

|

| (1) Just Ambient light |

|

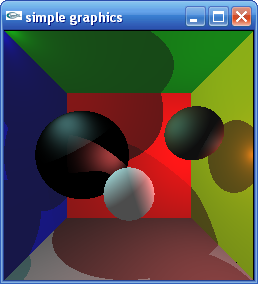

(2) Ray Casting (Ambient + Diffuse + Specular light) |

|

|

|

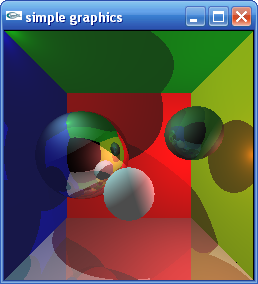

| (3) Ray Casting with simple shadow |

|

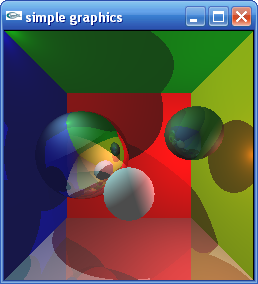

(4) Local Illumination + Recursive Ray Tracing |

NB: These results are computed by my code, so I cannot guarantee they are 100% correct. :o) You may get something different. If you find something wrong, please let me know. Thank you.

- How can I get proper shapes of polygons in recursive ray tracing in our complexscene.dat?

If you cannot get the black square (showing the camera side) on objects in ray tracing, you need to modify two files (polygon.cpp and gobject.h) in our college source code set. Compare the two results below.

(Figures: wrong result; correct result, the final proper result of ray tracing with complexscene.dat.)

The solution is:

(1) Add one line at the end of the function Polygon::Polygon(Material mat, int m) in polygon.cpp

setType( 1 );

(2) Modify the last line of function, bool Polygon::intersect(Ray ray, float& t, Colour& colour) in polygon.cpp

from

return (test%2 ==0);

to

return ((test==-N)||(test==N));

(3) In declaration of class GObject in gobject.h, add an int variable as below:

int type; // 0 = sphere, 1 = polygon

(4) Add two public functions in the GObject class in gobject.h as below:

int getType() { return type; }

void setType( int itype ) { type = itype; }

- I'm trying to compile our local illumination example (e.g. question.zip) with gcc 4.1.2, and I get an error message when compiling sphere.h. How can I solve it?

Our code cannot be compiled properly with newer gcc compilers (e.g. ver. 4.1.2) because the minus operator of the Vector class conflicts with the standard operator. If you are doing our coursework on UNIX, I recommend that you avoid the minus operator of the Vector class. Use the form below instead.

Instead of:

Vector v = p1 - p0;

use this:

Vector v = Vector( p1.x() - p0.x(), p1.y() - p0.y(), p1.z() - p0.z() );

I fixed some other errors and uploaded the patched question.zip, which renders just ambient light on three spheres.

- What should I compute to make local illumination on each object?

<Hint> First of all, you always need three (sometimes two) vectors to calculate the reflection of light on objects. At the point P of the object that is hit by a ray from the eye (COP), you need to compute (1) the normalized eye vector E (from the object point P toward the eye point, so that it is consistent with H = (E + L) / |E + L| and R = -E + 2(N.E)N), (2) the surface normal N at point P, and (3) the normalized light vector vL (from the object point P to the light point L). Then you can calculate the Lambertian diffuse term, the Phong specularity, and the reflection direction from these vectors and the intensity ||L|| of the light vector.

- How can I calculate shadows?

<Hint> At the object point P that is hit by a ray from the eye (COP), test whether the light vector vL is blocked by any other object between P and the light. Be careful with normalization: if vL is normalized, compare the hit distance with the distance from P to the light.
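Here is a minimal sketch of such a shadow test, assuming a scene made only of spheres. Vec3, Sphere, hitSphere and inShadow are hypothetical names for illustration, not functions from our college code, and the small epsilon is my own choice to stop a surface from shadowing itself.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal types (not the classes of the course code).
struct Vec3 {
    double x, y, z;
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline Vec3 sub(const Vec3& a, const Vec3& b) { return Vec3(a.x - b.x, a.y - b.y, a.z - b.z); }
inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Sphere {
    Vec3 center;
    double radius;
    Sphere(const Vec3& c, double r) : center(c), radius(r) {}
};

// Smallest positive t with origin + t * dir on the sphere, or -1 if there is none.
// dir must be a unit vector, so t is a true distance.
double hitSphere(const Vec3& origin, const Vec3& dir, const Sphere& s) {
    const double eps = 1e-6;  // skips the hit at the starting point itself
    Vec3 oc = sub(origin, s.center);
    double b = dot(oc, dir);
    double c = dot(oc, oc) - s.radius * s.radius;
    double disc = b * b - c;
    if (disc < 0.0) return -1.0;
    double root = std::sqrt(disc);
    double t = -b - root;
    if (t > eps) return t;
    t = -b + root;
    return (t > eps) ? t : -1.0;
}

// True if any object blocks the segment from P to the light position.
bool inShadow(const Vec3& P, const Vec3& lightPos, const std::vector<Sphere>& objects) {
    Vec3 toLight = sub(lightPos, P);
    double dist = std::sqrt(dot(toLight, toLight));
    Vec3 vL(toLight.x / dist, toLight.y / dist, toLight.z / dist);  // normalized light vector
    for (std::size_t i = 0; i < objects.size(); ++i) {
        double t = hitSphere(P, vL, objects[i]);
        if (t > 0.0 && t < dist) return true;  // an object beyond the light does not count
    }
    return false;
}
```

The comparison t < dist is the normalization trap: without it, an object behind the light would wrongly cast a shadow.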

- How can I calculate recursive ray tracing?

<Hint> At the object point P that is hit by a ray from the eye (COP), you also need to calculate the light reflected from other objects, iteratively. The light source now changes from the light vector vL to the reflected light vR coming from another object (which originates from the light source vL). The eye vector E also changes from the eye-pointing vector to a vector from the point P to the reflection point. The energy of the reflected light vR should be scaled by the specular reflectance at point P and added to the computed local illumination of the point P (see FAQ 8 and our lecture notes). Our equation can be rewritten in this different way (see FAQ 8 and our lecture notes for detail):

- My calculation of vectors might be wrong?

First, check the products of vectors. Even though a vector is stored in a similar data structure to a scalar, it represents a direction, and many formulas assume unit vectors. Also, don't forget that the dot product of two vectors is a scalar, whereas the cross product of two vectors is a vector that follows the right-hand rule.

- How can I use a function or variable of a class from outside that class in C++?

If you have an object obj of a class A with a public member variable a and a public member function f,

you can access a with obj.a and call f with obj.f().

If you have a pointer pA to such an object,

you can access a with pA->a and call f with pA->f().
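A tiny example of both access styles. The class A, its member a and its function f follow the names in the answer; the object name obj and the bodies are made up for illustration.

```cpp
class A {
public:
    int a;
    A() : a(0) {}
    int f() const { return a * 2; }  // arbitrary body, just something to call
};

// Access through an object: use the dot.
int useObject() {
    A obj;
    obj.a = 21;
    return obj.f();
}

// Access through a pointer: use the arrow.
int usePointer() {
    A obj;
    A* pA = &obj;
    pA->a = 5;
    return pA->f();
}
```

Note that a and f must be public (or the caller must be a friend) for this to compile.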

- How can I debug some 3D data in C++?

The easiest way is to print the variables of interest with cout << at each stage.

For instance,

cout << vert[poly[i][0]][0] << " " << vert[poly[i][0]][1] << " " << vert[poly[i][0]][2] << endl;

and don't forget to include the lines below at the top of your source. :o)

#include <iostream>

using namespace std;

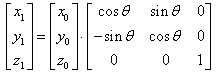

- How can I compute the rotation of each vertex about the z axis?

Use this matrix:

* Don't forget that all the symmetric matrices should be added in order from left to right, and that the sin and cos functions take radians, e.g., 360 degrees = 2 × π radians.

* You need to define π at the top of your code yourself:

#ifndef PI

#define PI 3.141592653589793

#endif
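As a sketch, the standard rotation about the z axis maps (x, y, z) to (x cos θ - y sin θ, x sin θ + y cos θ, z). The helper below applies it to one vertex, converting degrees to radians first. The function name rotateZ and the array layout are my own assumptions, and the rotation is counter-clockwise when you look down the +z axis toward the origin.

```cpp
#include <cmath>

#ifndef PI
#define PI 3.141592653589793
#endif

// Rotate the vertex in[] about the z axis by angleDeg degrees and store it in out[].
void rotateZ(const double in[3], double angleDeg, double out[3]) {
    double rad = angleDeg * PI / 180.0;  // sin and cos take radians
    double c = std::cos(rad);
    double s = std::sin(rad);
    out[0] = c * in[0] - s * in[1];
    out[1] = s * in[0] + c * in[1];
    out[2] = in[2];  // z is unchanged
}
```

For the boundary representation task you would call it once per profile vertex and per angle step.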

- How do I define a polygon by using vertices?

Just take four vertices (two from one slice and two from the neighbouring slice).

e.g.,

1 polygon = [ V(i)(ang), V(i)(ang+delta_ang), V(i+1)(ang + delta_ang), V(i+1)(ang) ];
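A possible way to generate all the polygons with this pattern is sketched below. It assumes the vertices are stored slice by slice, so that profile point i on angular slice a has index a * nProfile + i; that layout, and the names Quad and buildQuads, are assumptions of mine rather than part of the coursework code.

```cpp
#include <vector>

// Four vertex indices in the order
// V(i)(ang), V(i)(ang + delta_ang), V(i+1)(ang + delta_ang), V(i+1)(ang).
struct Quad {
    int v[4];
};

std::vector<Quad> buildQuads(int nProfile, int nAngles) {
    std::vector<Quad> quads;
    for (int a = 0; a < nAngles; ++a) {
        int aNext = (a + 1) % nAngles;  // the last slice wraps back to the first
        for (int i = 0; i + 1 < nProfile; ++i) {
            Quad q;
            q.v[0] = a * nProfile + i;
            q.v[1] = aNext * nProfile + i;
            q.v[2] = aNext * nProfile + i + 1;
            q.v[3] = a * nProfile + i + 1;
            quads.push_back(q);
        }
    }
    return quads;
}
```

With 10 profile points and 60 angles this gives 60 × 9 polygons, and the modulo is what closes the surface between the last and the first slice.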

- How do I compute the normal of a polygon?

Take two vectors in the polygon and compute their cross product following the right-hand rule. Beware the direction of the normal!

e.g., the computation of the cross product goes as follows:

a = (1,2,3)

b = (4,5,6)

a x b = (1,2,3) × (4,5,6) = { (2 × 6 - 3 × 5), - (6 × 1 - 4 × 3), (1 × 5 - 2 × 4) } = (-3, 6, -3)

// compute normal

normpoly[pp][0] = v0[1]*v1[2] - v0[2]*v1[1]; // X

normpoly[pp][1] = v0[2]*v1[0] - v0[0]*v1[2]; // Y

normpoly[pp][2] = v0[0]*v1[1] - v0[1]*v1[0]; // Z
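The same computation as a reusable helper, plus a normalization step, which the snippet above does not show. The names cross3 and normalize3 are hypothetical. Whether you want a unit normal depends on how you use it afterwards, but lighting formulas usually assume one.

```cpp
#include <cmath>

// out = a x b (right-hand rule).
void cross3(const double a[3], const double b[3], double out[3]) {
    out[0] = a[1] * b[2] - a[2] * b[1];  // X
    out[1] = a[2] * b[0] - a[0] * b[2];  // Y
    out[2] = a[0] * b[1] - a[1] * b[0];  // Z
}

// Scale v to unit length in place; returns false for a zero-length vector.
bool normalize3(double v[3]) {
    double len = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    if (len == 0.0) return false;  // degenerate polygon: the two edges are parallel
    v[0] /= len;
    v[1] /= len;
    v[2] /= len;
    return true;
}
```

Swapping the two arguments of cross3 flips the normal, which is the usual cause of inside-out shading.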

- How can I create a multi-dimensional matrix dynamically in C++?

If you want to load images or geometry into memory as a matrix (e.g., 100x200x3), you first need to make a pointer for the matrix and allocate memory before you use the data in your program. You can declare a fixed-size multi-dimensional array as below:

float mat[100][200][3];

Declared inside a function, this array lives in stack memory, which usually can hold only a small amount of data. Moreover, we cannot set the size of the matrix at run time in the following manner.

int i = 100;

int j = 200;

int k = 3;

float mat[i][j][k];

You will probably get errors when you compile it. The fixed-size declaration is rarely practical, because images and data come in different sizes and need to be sized at run time. In practice we sometimes need to allocate large blocks of memory dynamically, as MATLAB does. But how?

The standard C/C++ library provides the malloc and calloc functions for dynamic memory, which is allocated on the heap and can hold large amounts of data.

However, the basic C/C++ library does not provide a matrix data structure (2D or 3D); it only offers 1D arrays. There are two solutions: one is to use an extra library such as CBLAS; the other, more straightforward one is to use multi-level pointers, even though it is a bit tricky.

e.g. (an nrow-by-ncol 2D matrix whose entries each hold three doubles),

<memory declaration>

typedef double DEPTH[3]; // e.g., red, green, blue

DEPTH **matrix;

<memory dynamic allocation>

matrix = (DEPTH **) malloc (nrow * sizeof (DEPTH *));

for (int i = 0; i < nrow; i++)

matrix[i] = (DEPTH *) malloc (ncol * sizeof (DEPTH));

<memory usage>

matrix[r][c][d] = something;

<memory deallocation>

for(int i=0; i<nrow; i++)

free(matrix[i]);

free (matrix);

// Beware the order: free each row before the array of row pointers

* Now you can deal with dynamic multi-dimensional data in C/C++. ;o)
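If you prefer to stay in C++ and avoid manual malloc/free, one alternative (not what the example above does) is to wrap a single std::vector and compute the flat index yourself. The class name Matrix3D and its interface are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// A dynamically sized nrow x ncol x ndepth matrix stored in one contiguous block.
class Matrix3D {
public:
    Matrix3D(std::size_t nrow, std::size_t ncol, std::size_t ndepth)
        : nrow_(nrow), ncol_(ncol), ndepth_(ndepth),
          data_(nrow * ncol * ndepth, 0.0f) {}  // zero-initialized on the heap

    // Element access; no bounds checking, like a raw array.
    float& at(std::size_t r, std::size_t c, std::size_t d) {
        return data_[(r * ncol_ + c) * ndepth_ + d];
    }

    std::size_t rows() const { return nrow_; }
    std::size_t size() const { return data_.size(); }

private:
    std::size_t nrow_, ncol_, ndepth_;
    std::vector<float> data_;  // released automatically when the object goes out of scope
};
```

Usage would look like Matrix3D img(100, 200, 3); img.at(r, c, d) = something; and there is nothing to free afterwards.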

- What does the result of the boundary representation task (e.g., wine glass) look like?

* 3D result: the number of interpolated angles is 60 and the number of polygons is 540 (60 × 9).

** NB: Don't forget to generate the last series of polygons between the end and start points.

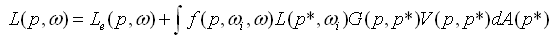

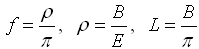

- How can we calculate radiosity?

We need to recall the radiance equation first. Radiosity calculates the global illumination of perfectly diffuse reflection only, while ray tracing takes into account the global illumination of specular-to-specular reflection. The radiosity is calculated as follows:

Under the assumption that all surfaces reflect perfectly diffusely, with no specular component,

we can apply the following equations to the above:

Then we finally get the radiosity equation by substituting them:

Finally, G'(p, p*)V(p, p*) can be calculated by the hemicube method.

- Why do we have two windows in the runtime of our radiosity code?

The small one shows the hemicube approximation used to compute the delta form factors, which account for the geometric relationship of two patches in terms of angle and distance, G'(p, p*), and the visibility including shadow, V(p, p*). These are calculated with the OpenGL Z-buffer and displayed as a continuous sequence of the five faces of the hemicube (one full-sized face and four half-sized faces).

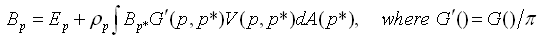

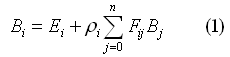

- How can we solve the radiosity equation?

There are two ways to calculate radiosity: gathering or shooting (progressive refinement).

In the gathering method, we can solve the radiosity equation with the Gauss-Seidel method (or any other linear solver).

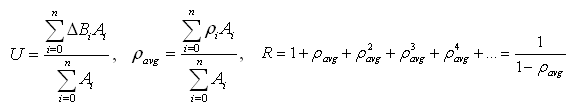

Eq.1 can be rewritten in this manner below:

Now we can solve it through any linear equation solver.

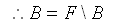

In the shooting method, the radiosity is distributed from a surface in the inverse direction.

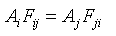

By using reciprocity relation of Form factors:

we finally can get this:

The shooting method saves a lot of computational cost and memory (our code uses it).

- In our radiosity code, where can we find the data relevant to the computation of radiosity?

As usual, the data structures are defined in header files. We have only one header file, "rad.h", which contains the important information. For the room setting, see the "room.c" file. In particular, you may be interested in these variables: "params->nPatches", "params->patches[].area", "params->patches[].unshotRad.samples[]", "params->patches[].reflectance->sample[k]", "kNumberOfRadSamples", "ambient->samples[]".

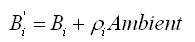

- How can we compute the ambient term?

Our lecture notes give a solution. When we run the first iteration of our code, the whole energy of a light source is distributed to all the surfaces in the room. Then the energy distributed to each surface travels to other surfaces in the following iterations. The ambient term is computed as the product of the infinite inter-reflection of the averaged reflectance and the averaged unshot energy at the first iteration.

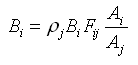

The calculation may follow:

The computed ambient term will be used in this manner:

- What are patches and elements (substructuring)?

First we create a coarse patch mesh and compute the radiosity of each patch. Then we find areas with a high radiosity gradient. The patches there are subdivided into elements, and the radiosity value of each element is computed from the patches' radiosity values.


- How can we make smooth shading?

Our basic code calculates a colour per element (not per vertex), so there is no interpolation within an element. If we instead calculate a colour value for each vertex by averaging its neighbouring elements (one, two, three, or four of them), we get smooth interpolation within elements. You may want to build two more arrays: one for the colour of each vertex and one for the number of neighbouring elements.

(Figures: before and after smooth shading.)

"Out of clutter find simplicity"

- The first of Three Rules of Work, A.Einstein.

© 2007 Min H. Kim

Hosted by Visual Computing Laboratory, School of Computing, KAIST.