Logistics

You can download the raw material from KLMS.

Part 1: Questions

-

Code template in

code/. -

Writeup template in

writeup/.

Part 2: Code

-

Writeup template: In the zip: writeup/

-

Required files: code/, writeup/writeup.pdf

-

Don’t add more files in code/ directory. Autograder use only the codes in your 'hw4_functions.py' file.

Submission

|

QnA

-

Please use KLMS QnA board, and title of the post like: [hw4] question about ~

-

If possible (not mandatory), please post the question as opened and english since other student may have same question.

-

Note that TAs are trying to answer question ASAP, but it may take 1~2 day on weekend. Please post the question on weekday if possible.

-

TA in charge: Hyeongjun Cho <hjcho@vclab.kaist.ac.kr>

Overview

We will create a local feature matching algorithm (Szeliski Chapter 4.1 [1st ed.] / Chapter 7.1 [2nd ed.]) and attempt to match multiple views of real-world scenes. There are hundreds of papers in the computer vision literature addressing each stage. We will implement a simplified version of SIFT.

Task: Implement the three major steps of local feature matching:

-

Detection in

get_interest_points(). Please implement the Harris corner detector. You do not need to worry about scale invariance or keypoint orientation estimation for your Harris corner detector. -

Description in

get_descriptors(). Please implement a SIFT-like local feature descriptor (Szeliski 4.1.2 [1st ed.] / 7.1.2 [2nd ed.]). You do not need to implement full SIFT! Add complexity until you meet the rubric. -

Matching in

match_features(). Please implement the "nearest neighbor distance ratio test" method of matching local features (Szeliski 4.1.3 [1st ed.] / 7.1.3. [2nd ed.]; equation 4.18 [1st ed.] / 7.18 [2nd ed.] in particular).

Forbidden functions

Direct Numpy, SciPy, scikit, OpenCV functions for:

-

image gradient (e.g.,

cv2.Sobel()) -

corner/feature detection

-

feature description

-

distance/similarity calculation

-

k-nearest neighbor

-

KD-tree

Potentially Useful:

cv2.filter2D(), skimage.measure.label(), np.argsort(), np.arctan2().

Notes

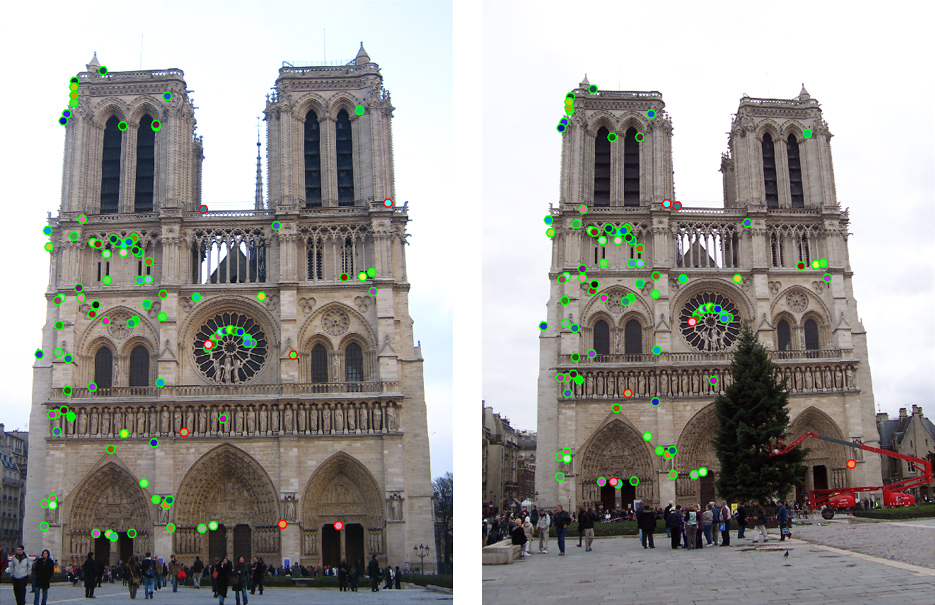

main.py handles files, visualization, and evaluation, and calls placeholders of the three functions to implement. Running the starter code without modification will visualize random interest points matched randomly on the Notre Dame images. The correspondence will be visualized with show_correspondence().

The Notre Dame, Mount Rushmore, and Episcopal Gaudi image pairs include ground truth evaluation. evaluate_correspondence() will classify each match as correct or incorrect based on hand-provided matches.

As you implement your feature matching pipeline, check whether your performance increases using evaluate_correspondence(). Take care not to tweak parameters specifically for the initial Notre Dame image pair. We provide additional image pairs in extra data (available in KLMS), which exhibit more viewpoint, scale, and illumination variation. With careful consideration of the qualities of the images on display, it is possible to match these, but it is more difficult.

An Implementation Strategy

-

Use

cheat_interest_points()instead ofget_interest_points(). This function will only work for the 3 image pairs with ground truth correspondence. This function cannot be used in your final implementation. It directly loads the 100 to 150 ground truth correspondences for the test cases. Even with this cheating, your accuracy will initially be near zero because the features are random and the matches are random. -

Implement

match_features(). Accuracy should still be near zero because the features are random. -

Change

get_descriptors()to cut out image patches. Accuracy should increase to ~40% on the Notre Dame pair if you’re using 16x16 (256 dimensional) intensity patches as your feature. Accuracy on the other test cases will be lower (Mount Rushmore 25%, Episcopal Gaudi 7%). Image patches are not invariant to brightness change, contrast change, or small spatial shifts, but this provides a baseline. -

Finish

get_descriptors()by implementing a SIFT-like feature. Accuracy should increase to ~50% on the Notre Dame pair, ~40% on Mount Rushmore, and ~10% on Episcopal Gaudi. These accuracies still aren’t great because the human-selected correspondences might look quite different at the local feature level. You should notice that your more confident matches are more likely to be true matches—these pass the ratio test more easily. -

Stop cheating (!) and implement

get_interest_points(). Harris corners aren’t as good as ground-truth points (which we know to correspond), so accuracy may drop. On the other hand, you can get hundreds or even a few thousand interest points, so you have more opportunities to find confident matches. If you only evaluate the most confident 100 matches on the Notre Dame pair, you should be able to achieve 90% accuracy (see themaxPtsEvalparameter).

Writeup

Describe your process and algorithm, show your results, and tell us any other information you feel is relevant. We provide a LaTeX template writeup/writeup.tex. Please compile it into a PDF and submit it along with your code. Also, your report should include following informations.

-

Quantitatively compare the impact of the method you’ve implemented. For example, using SIFT-like descriptors instead of normalized patches increased our performance from 70% good matches to 90% good matches.

-

Show how well your method works on the Notre Dame, Mount Rushmore, and Episcopal Gaudi image pairs—use

eval.jpgas generated by the starter code.

Rubric

If your implementation reaches 80% accuracy on your most confident 100 correspondences for the Notre Dame pair, then you will receive full code credit.

Time limit: 20 minutes is your time limit. If your process over time limit, then autograder will kill your process. You must write efficient code—think before you write.

-

Hint 1: Use 'steerable' (oriented) filters to build the descriptor.

-

Hint 2: Use matrix operations for feature descriptor matching.

We will test your code by PC with Intel® Core™ i9-12900K CPU @ 3.19GHz. Installed, it has Numpy, SciPy, scikit-image, OpenCV, matplotlib.

-

[+20 pts]: Implementation of Harris corner detector in

get_interest_points() -

[+30 pts]: Implementation of SIFT-like local feature in

get_descriptors() -

[+10 pts]: Implementation of matching in

match_features() -

[+20 pts]: Written questions.

-

[+20 pts]: Writeup.

There is no extra credit in this homework.

Credits

The Python version of this project are revised by Dahyun Kang, Inseung Hwang, Donggun Kim, Jungwoo Kim and Min H. Kim. The original assignment developed by James Hays and James Tompkin.